Video Depth Anything: Enhanced Accuracy for Long Video Depth Estimation

Table of Contents

- What is Video Depth Anything?

- Video Depth Anything Overview:

- Examples of Video Depth Anything in Action

- Example 1: High-Action Scene

- Example 2: Complex Scene with Moving Camera

- Example 3: Chaotic and Shaky Scene

- Comparison with Competitors

- Example 1: Grass Details

- Example 2: Metal Fence Details

- How to Try Video Depth Anything?

- Using the Hugging Face Space

- Running the Tool Locally

- How to Use Video Depth Anything from GitHub?

- Step 1: Preparation

- Step 2: Inference on a Video

- Impressive Performance on Long Videos

- Conclusion

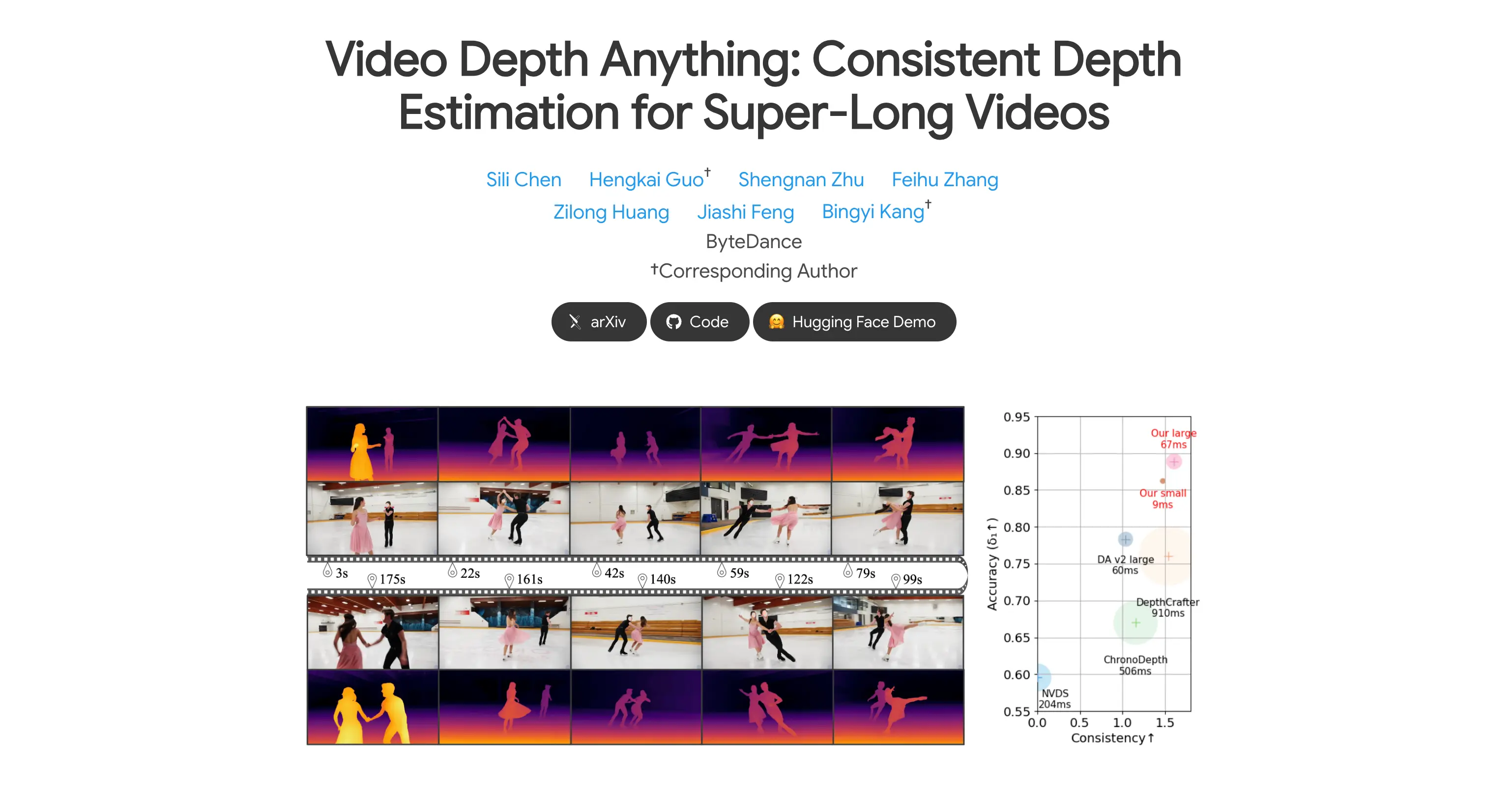

In this article, I’ll walk you through the details of a fascinating tool called Video Depth Anything, which specializes in estimating the depth of objects in long videos. This tool is an improvement over its predecessor, offering enhanced accuracy and performance, especially for longer and more complex video sequences.

What is Video Depth Anything?

Video Depth Anything is a tool designed to analyze long videos and estimate how far objects are from the camera. This process is known as depth estimation, and it’s particularly useful for creating depth videos. While there are other tools that can perform depth estimation, Video Depth Anything stands out because it’s specifically optimized for handling longer videos with greater accuracy.

This tool is based on an existing framework called Depth Anything, but it has been fine-tuned further to deliver even better results.

The improvements are evident in its ability to handle complex scenes, high-action sequences, and shaky camera movements while maintaining precision.

Video Depth Anything Overview:

| Feature | Details |
|---|---|
| Model Name | Video Depth Anything |
| Functionality | Long video depth estimation and 3D reconstruction |
| Paper | arxiv.org/abs/2501.12375 |
| Project Page | videodepthanything.github.io |
| GitHub Repository | github.com/DepthAnything/Video-Depth-Anything |
| Hugging Face Space | huggingface.co/spaces/depth-anything/Video-Depth-Anything |
| Input | RGB video sequences |
| Output | High-quality depth maps and 3D reconstructions |
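To make the idea of a depth map more concrete, here is a minimal sketch of how one frame of depth values could be turned into a grayscale image for a depth video. This is not the tool's own code: the function name `depth_to_grayscale` and the sample values are made up for illustration, and whether brighter pixels mean nearer or farther depends on the convention the tool uses.

```python
def depth_to_grayscale(depth_map):
    """Min-max normalize a 2D list of depth values to integers in 0-255."""
    flat = [value for row in depth_map for value in row]
    lowest, highest = min(flat), max(flat)
    span = highest - lowest
    if span == 0:
        # A perfectly flat depth map has no contrast; render it as black.
        return [[0 for _ in row] for row in depth_map]
    return [
        [round(255 * (value - lowest) / span) for value in row]
        for row in depth_map
    ]


# Hypothetical 2x3 depth map (made-up values, arbitrary units).
example = [
    [0.0, 1.0, 2.0],
    [3.0, 4.0, 5.0],
]
frame = depth_to_grayscale(example)
print(frame)  # [[0, 51, 102], [153, 204, 255]]
```

Applying the same scaling to every frame, one after another, is what produces a depth video from a sequence of depth maps.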

Examples of Video Depth Anything in Action

To give you a better understanding of how this tool performs, let’s look at some examples.

Note that the videos shown in these examples are played at three times normal speed to save time; the depth estimation remains highly accurate.

Example 1: High-Action Scene

In the first example, we see a high-action scene with a lot of movement. Video Depth Anything is able to estimate the depth of all objects in the video very accurately. This is particularly impressive because high-action scenes are often challenging for depth estimation tools.

Example 2: Complex Scene with Moving Camera

The second example features a complex scene where the camera is moving rapidly, and people are jumping around. Even in such a dynamic environment, the tool manages to estimate the depth of everything in the video with remarkable precision.

Example 3: Chaotic and Shaky Scene

In the third example, we have a chaotic scene with a shaky camera. Video Depth Anything captures the depth of all objects in the video very accurately. This demonstrates the tool’s robustness in handling less-than-ideal recording conditions.

Comparison with Competitors

To highlight the superiority of Video Depth Anything, let’s compare it with a competitor called Depth Crafter.

Example 1: Grass Details

In the first comparison, Depth Crafter is shown on the left, and Video Depth Anything is on the right. When you look at the grass, you’ll notice that the new tool produces much sharper and more detailed results compared to Depth Crafter, which appears blurrier.

Example 2: Metal Fence Details

In the second comparison, we focus on a metal fence. Depth Crafter’s output is blurry, while Video Depth Anything generates a highly accurate depth map of the fence. The difference in detail is striking, especially when you zoom in on specific areas.

These comparisons clearly demonstrate the advancements made in Video Depth Anything, making it a more reliable choice for depth estimation tasks.

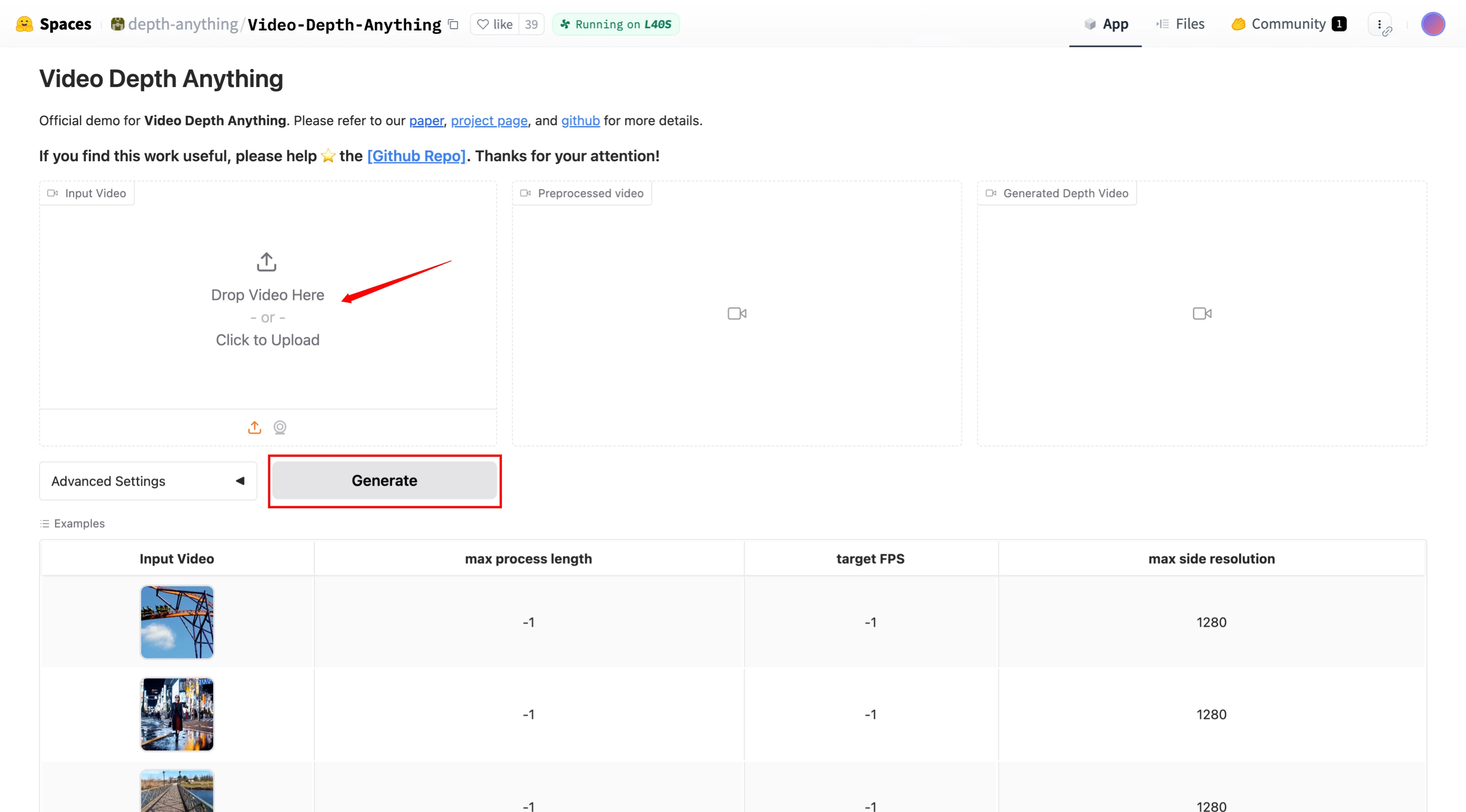

How to Try Video Depth Anything?

If you’re interested in testing this tool for yourself, the developers have made it incredibly accessible. They’ve released a GitHub repository containing all the instructions you need to download and run the tool on your computer.

Additionally, they’ve created a free Hugging Face space where you can try it out without any installation.

Using the Hugging Face Space

- Visit the Hugging Face space: huggingface.co/spaces/depth-anything/Video-Depth-Anything

- Upload your video.

- Click the Generate button.

- The tool will process the video and produce a depth video as the output.

Running the Tool Locally

If you prefer to run the tool locally, follow these steps:

- Clone the GitHub repository.

- Follow the instructions to set up the environment and download the necessary files.

- Run the tool using the provided scripts.

One of the standout features of Video Depth Anything is its efficiency.

The models are relatively small: the smaller version has only 28 million parameters, and the larger version has 381 million. This keeps the tool lightweight and practical to run on a wide range of systems.
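As a rough back-of-the-envelope check on what those parameter counts mean, the sketch below estimates the size of the weights alone. It assumes 4 bytes per parameter for float32 and 2 bytes for float16; actual memory use while processing a video will be higher, because activations and the video frames themselves also take space.

```python
# Bytes needed to store one parameter at each precision (an assumption
# about how the weights are stored, not something the article states).
BYTES_PER_PARAM = {"float32": 4, "float16": 2}

# Parameter counts quoted in the article.
MODEL_PARAMS = {"small": 28_000_000, "large": 381_000_000}


def weight_size_mb(num_params, dtype="float32"):
    """Approximate size of the weights alone, in megabytes (10^6 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1_000_000


for name, params in MODEL_PARAMS.items():
    fp32 = weight_size_mb(params, "float32")
    fp16 = weight_size_mb(params, "float16")
    print(f"{name}: ~{fp32:.0f} MB in float32, ~{fp16:.0f} MB in float16")
```

Under these assumptions the small model's weights come to roughly 112 MB and the large model's to roughly 1.5 GB in float32.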

How to Use Video Depth Anything from GitHub?

Step 1: Preparation

- Clone the Repository: Open your terminal and run the following command to clone the repository: `git clone https://github.com/DepthAnything/Video-Depth-Anything`

- Navigate to the Project Directory: Change into the cloned directory: `cd Video-Depth-Anything`

- Install Dependencies: Install the required Python packages using `pip`: `pip install -r requirements.txt`

- Download Checkpoints: Download the necessary model checkpoints and place them in the `checkpoints` directory. You can use the provided script to download the weights: `bash get_weights.sh`. This script downloads the required weights and places them in the correct directory.
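Before moving on to inference, it can help to confirm that the weights actually landed in the `checkpoints` directory. The following is a minimal sketch of such a check; the helper name `find_checkpoints` is hypothetical, and the `.pth` file extension is an assumption about how the weights are saved, so adjust the pattern if your files are named differently.

```python
from pathlib import Path


def find_checkpoints(repo_dir="."):
    """Return the sorted names of *.pth files in <repo_dir>/checkpoints.

    The ".pth" extension is an assumption, not something the article states.
    """
    checkpoint_dir = Path(repo_dir) / "checkpoints"
    if not checkpoint_dir.is_dir():
        return []
    return sorted(path.name for path in checkpoint_dir.glob("*.pth"))


# Run this from inside the cloned Video-Depth-Anything directory.
found = find_checkpoints(".")
if found:
    print("Found checkpoints:", ", ".join(found))
else:
    print("No checkpoint files found; try running the download script again.")
```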

Step 2: Inference on a Video

- Prepare Your Input Video: Place your input video in the `./assets/example_videos/` directory or specify the path to your video in the command.

- Run the Inference Script: Use the `run.py` script to process your video. For example: `python3 run.py --input_video ./assets/example_videos/davis_rollercoaster.mp4 --output_dir ./outputs --encoder vitl`

  - `--input_video`: Path to the input video file.

  - `--output_dir`: Directory where the output depth maps and processed video will be saved.

  - `--encoder`: Choose the encoder model (`vitl` for large, `vitb` for base, or `vits` for small).

- Check the Output: After the script finishes running, the output depth maps and processed video will be saved in the `./outputs` directory.
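If you have several videos to process, you could wrap the command above in a small script. The sketch below is one possible way to do that, not part of the repository: the helper names `build_command` and `run_on_folder` are hypothetical, it only uses the three flags described above, and it assumes your videos are `.mp4` files. By default it performs a dry run that prints each command instead of executing it.

```python
import subprocess
from pathlib import Path

# Encoder names as listed in the article.
ENCODERS = ("vits", "vitb", "vitl")


def build_command(video_path, output_dir="./outputs", encoder="vitl"):
    """Build the run.py command line for a single video."""
    if encoder not in ENCODERS:
        raise ValueError(f"encoder must be one of {ENCODERS}, got {encoder!r}")
    return [
        "python3", "run.py",
        "--input_video", str(video_path),
        "--output_dir", str(output_dir),
        "--encoder", encoder,
    ]


def run_on_folder(folder, output_dir="./outputs", encoder="vitl", dry_run=True):
    """Print (dry run) or execute run.py once for every .mp4 file in a folder."""
    videos = sorted(Path(folder).glob("*.mp4"))
    commands = [build_command(video, output_dir, encoder) for video in videos]
    for command in commands:
        if dry_run:
            print(" ".join(command))
        else:
            # check=True stops the batch if one video fails.
            subprocess.run(command, check=True)
    return commands


# Dry run from inside the cloned repository: only prints the commands.
run_on_folder("./assets/example_videos")
```

Pass `dry_run=False` once the printed commands look right.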

Impressive Performance on Long Videos

To further showcase the tool’s capabilities, let’s look at an example of a 28-second Ferris wheel video. Video Depth Anything processes the video and generates a consistent and accurate depth map.

Conclusion

Video Depth Anything is a powerful tool for depth estimation in long videos. Its enhanced accuracy, ability to handle complex and high-action scenes, and detailed output make it a significant improvement over previous tools like Depth Crafter.

With its availability on GitHub and Hugging Face, trying out Video Depth Anything is easier than ever.
