UI-TARS: Open Source AI Agent for Browser and Desktop Automation

Table of Contents

- What is UI-TARS: The AI Agent for Computer Control

- UI-TARS AI Desktop Agent Overview:

- Key Features of UI-TARS

- What Can UI-TARS Do?

- 1. Browser Automation

- Example 1: Checking the Weather

- Example 2: Sending a Tweet

- Example 3: Finding Roundtrip Flights

- 2. Desktop Automation

- Example 1: Editing a PowerPoint Presentation

- Example 2: Installing an Extension in VS Code

- Why UI-TARS Stands Out

- 1. Open Source and Free

- 2. Iterative Learning

- 3. State-of-the-Art Performance

- How to Get Started with UI-TARS

- Using UI-TARS Locally

- Configuring the Model

- Quick Start Guide: UI-TARS Desktop

- Download

- Install

- Deployment

- Development

- System Requirements

- How to Use UI-TARS Space on HuggingFace?

- Final Thoughts

This week, I came across an impressive open-source AI agent called UI-TARS. It’s a free tool that offers both a browser agent and a desktop agent, making it incredibly versatile. The browser agent works within your internet browser, while the desktop agent can interact with your entire computer, not just the browser.

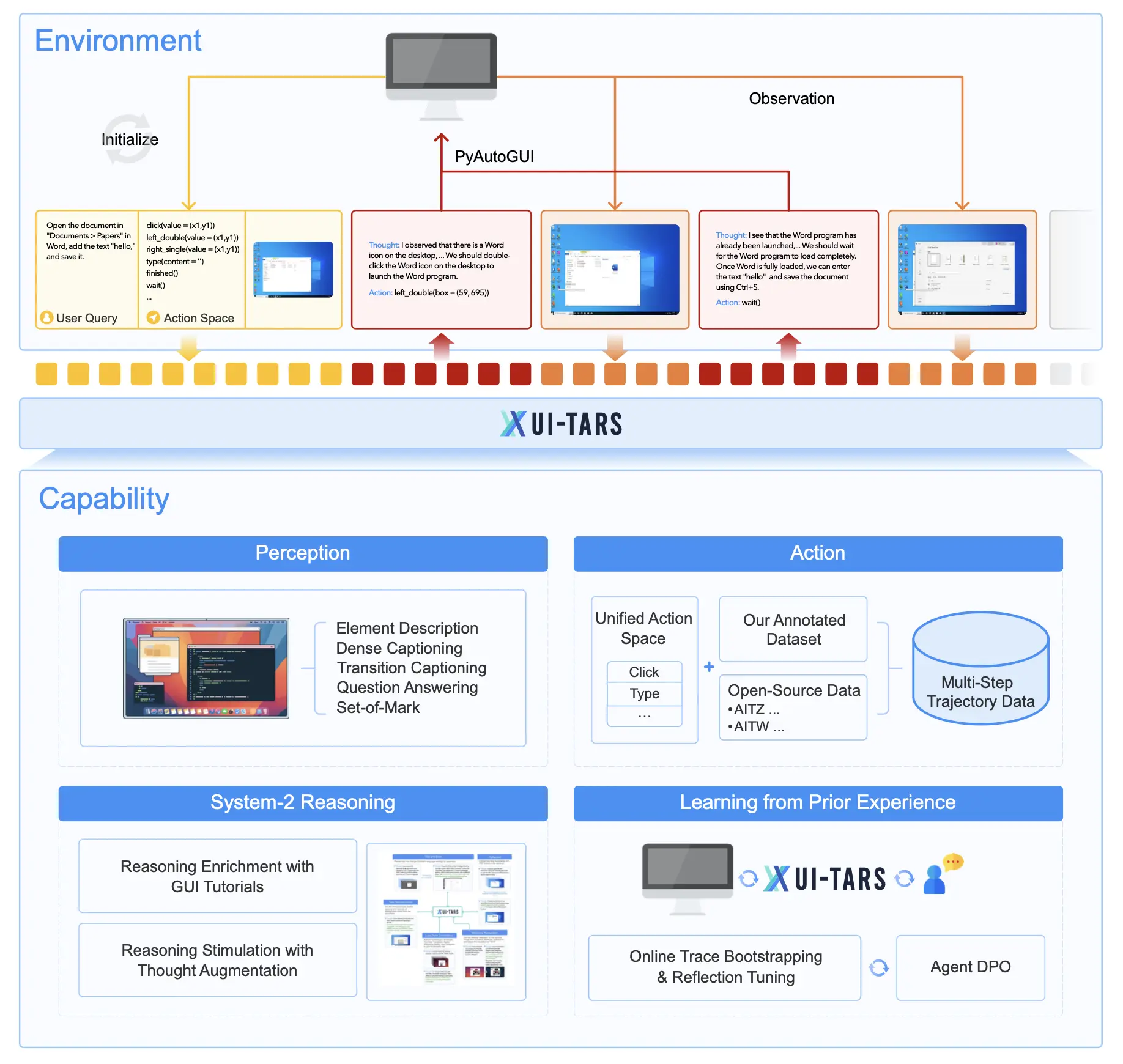

What is UI-TARS: The AI Agent for Computer Control

UI-TARS is an AI model that you can pair with any framework capable of controlling a computer, such as Browser Use, or with other applications. It also comes with its own open-source implementation.

The model is available in three sizes: 2B, 7B, and 72B. These models are specially trained for tasks involving computer control, detecting what’s on the screen, and predicting the next steps.
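Models like UI-TARS typically reply with a short "thought" followed by a single action written in a function-call style. The sketch below shows how such a reply could be turned into structured data. It assumes an output shaped like "Thought: ..." followed by "Action: click(start_box='(235,512)')"; that format and the helper names parse_step and box_to_point are illustrative assumptions, so check the model card for the exact syntax your model version emits.

```python
import re
from dataclasses import dataclass, field

# Assumed reply shape (an illustration, not a confirmed UI-TARS spec):
#   Thought: <free text>
#   Action: click(start_box='(235,512)')


@dataclass
class Step:
    thought: str
    action: str                               # e.g. "click", "type", "finished"
    args: dict = field(default_factory=dict)  # keyword arguments as raw strings


_ACTION_RE = re.compile(r"^(\w+)\((.*)\)$", re.DOTALL)
_ARG_RE = re.compile(r"(\w+)='((?:[^'\\]|\\.)*)'")


def parse_step(text: str) -> Step:
    """Split a model reply into its thought and a structured action."""
    thought_part, _, action_part = text.partition("Action:")
    thought = thought_part.replace("Thought:", "", 1).strip()
    match = _ACTION_RE.match(action_part.strip())
    if match is None:
        raise ValueError(f"unrecognized action: {action_part!r}")
    name, raw_args = match.groups()
    args = dict(_ARG_RE.findall(raw_args))  # key='value' pairs
    return Step(thought, name, args)


def box_to_point(box: str) -> tuple[int, int]:
    """Turn "(x,y)" or "(x1,y1,x2,y2)" into one (x, y) point (box center)."""
    nums = [int(n) for n in re.findall(r"-?\d+", box)]
    if len(nums) == 2:
        return nums[0], nums[1]
    if len(nums) == 4:
        x1, y1, x2, y2 = nums
        return (x1 + x2) // 2, (y1 + y2) // 2
    raise ValueError(f"unexpected box: {box!r}")
```

Keeping the raw argument strings in args leaves coordinate handling to box_to_point, so the same parser works for actions that carry text (such as typing) instead of positions.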

UI-TARS AI Desktop Agent Overview:

| Feature | Details |
|---|---|
| Model Name | UI-TARS |
| Functionality | AI agent for browser and desktop automation |
| Paper | arxiv.org/abs/2501.12326 |
| Usage Options | Hugging Face Demo, Local Installation |
| Hugging Face Space | huggingface.co/spaces/bytedance-research/UI-TARS |
| GitHub Repository | github.com/bytedance/UI-TARS-desktop |
| Discord | discord.gg/txAE43ps |

Key Features of UI-TARS

- Vision Model: UI-TARS is a vision model, which means it can interpret and interact with visual data on your screen.

- Benchmarks: The 7B model outperforms previous state-of-the-art (SOTA) models, and the 72B model takes an even bigger leap in performance.

- Performance: It beats Claude 3.5 Sonnet on computer-use tasks, which is impressive.

- Fine-Tuned Model: UI-TARS is fine-tuned from the Qwen2-VL model, which is already known for its excellence in vision tasks.

What Can UI-TARS Do?

1. Browser Automation

UI-TARS can automate tasks in your web browser with ease. Here are a few examples:

Example 1: Checking the Weather

I prompted UI-TARS with the task: “Get the current weather in San Francisco using the web browser.” Here’s what happened:

- It automatically opened Google Chrome.

- Typed in “weather in San Francisco”.

- Analyzed the screen and reported the answer in the chat interface.

This is a simple example, but it shows how UI-TARS can handle basic browser tasks effortlessly.

Example 2: Sending a Tweet

Next, I tried a slightly more complex task: “Send a Twitter with the content ‘Hello World.’” Here’s how it worked:

- It opened Google Chrome and navigated to twitter.com.

- Typed out the tweet and posted it automatically.

This demonstrates how UI-TARS can automate social media tasks, saving you time and effort.

Example 3: Finding Roundtrip Flights

One of the cooler examples is finding roundtrip flights. I prompted it with: “Find roundtrip flights from Seattle to New York with a set departure and return date.” Here’s what it did:

- Searched for the departure and destination airports.

- Opened the date picker and selected the departure and return dates.

- Clicked on the search button.

What’s impressive is that UI-TARS shows its reasoning step-by-step in the chat interface. For instance, if the page doesn’t load fast enough, it waits for the page to fully load before proceeding. This is a significant improvement over other agents I’ve tested, which often get stuck in such situations.

After the page loaded, it clicked on the sort and filter dropdown and sorted the results by price. This level of detail and adaptability makes UI-TARS a powerful tool for automating complex tasks.
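The wait-then-continue behavior described above fits a simple perception-action loop: capture the screen, ask the model for one action, and either act, pause, or stop. Here is a minimal sketch, assuming hypothetical callables take_screenshot, predict, and execute that you would wire to a real screen-capture tool, the model endpoint, and a mouse/keyboard driver. The action strings "wait()" and "finished()" are assumed names used for illustration, not confirmed UI-TARS syntax.

```python
import time
from typing import Callable


def run_agent(
    take_screenshot: Callable[[], bytes],
    predict: Callable[[bytes, list[str]], str],
    execute: Callable[[str], None],
    max_steps: int = 15,
    wait_seconds: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> list[str]:
    """Screenshot -> predict -> act, until the model reports it is finished."""
    history: list[str] = []
    for _ in range(max_steps):
        screenshot = take_screenshot()         # fresh view of the screen each step
        action = predict(screenshot, history)  # model sees the screen plus prior actions
        history.append(action)
        if action.startswith("finished"):
            break                              # task complete
        if action.startswith("wait"):
            sleep(wait_seconds)                # page still loading: pause, then look again
            continue
        execute(action)                        # click, type, scroll, and so on
    return history
```

Passing sleep in as a parameter keeps the loop easy to test without real delays, and max_steps guards against an agent that never decides it is done.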

You can watch more demo videos and check out the project at github.com/bytedance/UI-TARS.

2. Desktop Automation

UI-TARS isn’t limited to browsers; it can also interact with desktop applications like Microsoft Word, PowerPoint, and VS Code.

Example 1: Editing a PowerPoint Presentation

I tested UI-TARS with the prompt: “Make the background color of slide 2 the same as the color of the title from slide 1.” Here’s how it handled the task:

- Selected slide 2 from the sidebar.

- Accessed the background color settings.

- Chose the red color from the color palette to match the title color from slide 1.

This example shows how UI-TARS can assist with tasks in productivity software, making it a valuable tool for professionals.

Example 2: Installing an Extension in VS Code

Another useful demonstration involved installing an extension in VS Code. The prompt was: “Please help me install the AutoDocstring extension in VS Code in the sidebar.” Here’s what happened:

- It opened VS Code.

- Waited for the application to fully load before proceeding.

- Accessed the extensions view.

- Typed “AutoDocstring” in the search bar.

- Clicked the install button to install the extension.

This example highlights UI-TARS’ ability to handle tasks in development environments, making it a handy tool for programmers.

Why UI-TARS Stands Out

1. Open Source and Free

UI-TARS is completely free and open source, which is a huge advantage. Unlike tools such as Claude Computer Use, which is expensive and closed source, UI-TARS gives you full control over its functionality.

2. Iterative Learning

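One of the coolest features of UI-TARS is how it learns iteratively. During training, it is refined over repeated rounds on newly collected interaction traces, and a method called reflection tuning teaches it to learn from its mistakes and recover from them. This learning happens during training rather than on your computer, but it makes the released models more reliable when they run into unexpected situations.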
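To make the idea concrete, here is a simplified, hypothetical sketch of how corrected mistakes can become a training signal: each step where an annotator fixed the agent's action yields a pair in which the corrected action is preferred over the original one. That is the kind of data used for preference tuning such as DPO (Direct Preference Optimization, the suffix on some of the model variants listed later). The data classes and the function build_reflection_pairs are illustrative assumptions, not code from the UI-TARS project.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AnnotatedStep:
    observation: str                        # stand-in for the screenshot + task context
    model_action: str                       # what the agent actually did
    corrected_action: Optional[str] = None  # annotator's fix, if the step was wrong


@dataclass
class PreferencePair:
    prompt: str
    chosen: str    # preferred (corrected) action
    rejected: str  # the agent's original, mistaken action


def build_reflection_pairs(trace: list[AnnotatedStep]) -> list[PreferencePair]:
    """Turn annotated mistakes into (chosen, rejected) pairs for preference tuning."""
    pairs = []
    for step in trace:
        # Only steps that were actually corrected carry a learning signal.
        if step.corrected_action and step.corrected_action != step.model_action:
            pairs.append(PreferencePair(step.observation, step.corrected_action, step.model_action))
    return pairs
```

Steps that were already correct produce no pair here; in a fuller pipeline they would more likely feed ordinary supervised fine-tuning.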

3. State-of-the-Art Performance

UI-TARS outperforms other models in various benchmarks. For example:

- It beats the previous top performer by over 40% in some benchmarks.

- It outperforms GPT-4o and Claude on many of the reported metrics.

How to Get Started with UI-TARS

UI-TARS is available on Hugging Face, and all the models are ready for download. Here’s what you need to know:

- License: It’s released under the Apache 2.0 license, which means you can modify it and even use it for commercial purposes with minimal restrictions.

- Models: Besides the small 2-billion parameter model, there are two larger sizes:

- A 72-billion parameter model for high-end GPUs.

- A 7-billion parameter model for GPUs with less memory.
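Why the 72B model needs high-end hardware comes down to simple arithmetic: weight memory is roughly the parameter count times the bytes stored per parameter. The sketch below is a back-of-the-envelope estimate that uses the nominal sizes (2B, 7B, 72B) rather than exact parameter counts, and the helper weight_memory_gb is a hypothetical name. It ignores the KV cache, activations, and framework overhead, so real usage is higher.

```python
# Approximate storage per parameter for common weight formats.
BYTES_PER_PARAM = {"bf16": 2.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, dtype: str = "bf16") -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes).

    1e9 parameters * N bytes each = N GB, so the math reduces to a product.
    """
    return params_billion * BYTES_PER_PARAM[dtype]


for size in (2, 7, 72):
    print(f"{size}B @ bf16 ≈ {weight_memory_gb(size):.0f} GB of weights")
```

At bf16 this gives about 4, 14, and 144 GB. The 72B weights alone exceed a single 80 GB GPU, which is why that model usually needs several GPUs or a quantized format.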

You can find all the instructions for downloading and running UI-TARS offline on your computer in the Hugging Face repository.

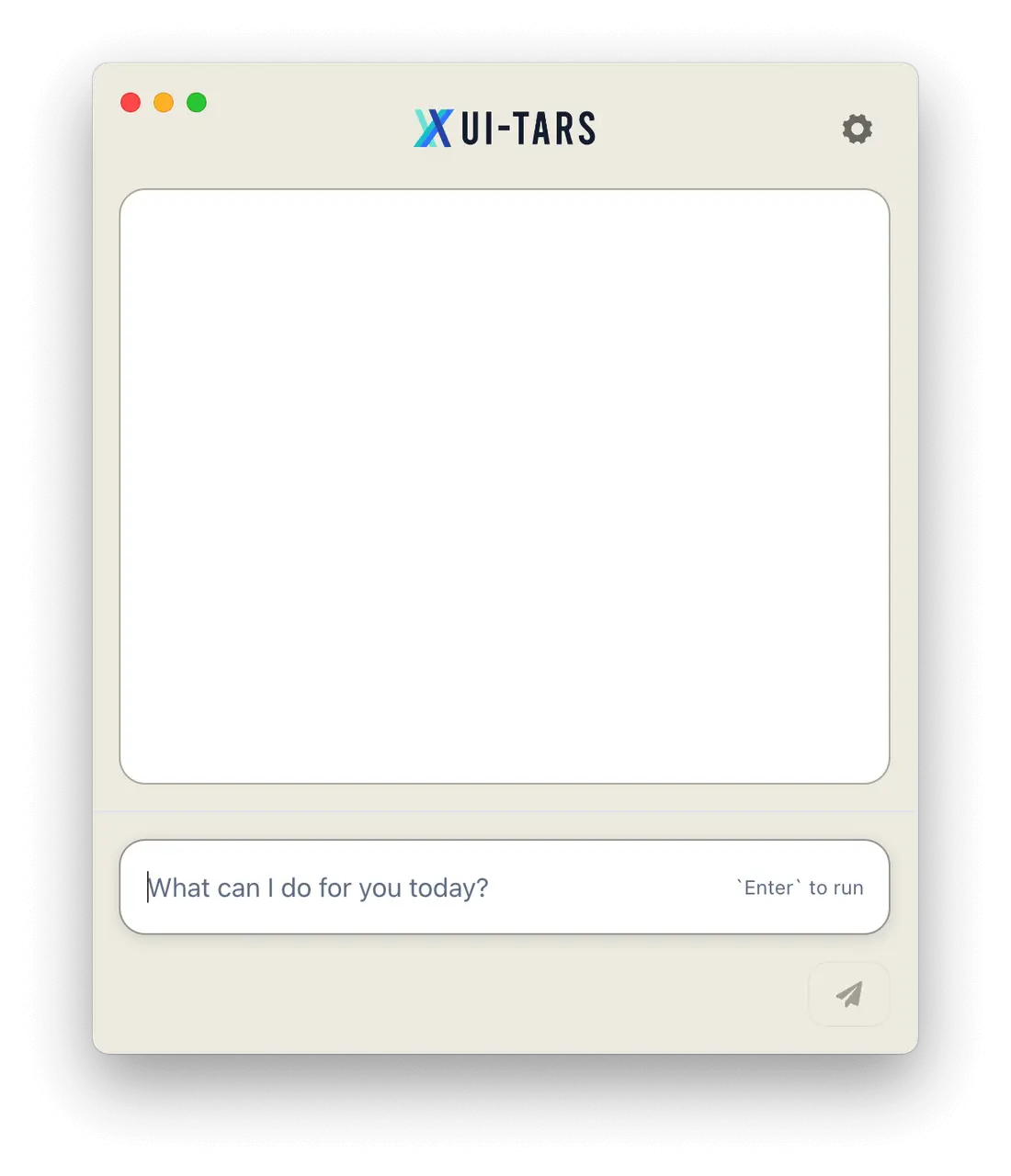

Using UI-TARS Locally

UI-TARS comes with its own open-source implementation, the UI-TARS Desktop app. To run it from source:

- Clone the Repository: Start by cloning the UI-TARS Desktop repository (github.com/bytedance/UI-TARS-desktop) to your computer.

- Install Packages: Navigate to the folder and run pnpm install to install the required packages.

- Run the App: Use the command pnpm run dev to get everything running.

Once set up, the interface is straightforward. You can send prompts, and the model will start controlling your computer accordingly.

Configuring the Model

- Settings: In the settings, enter the base URL of your VLM (vision-language model) server, for example a vLLM endpoint, along with the model name.

- Vision Support: The app is not tied to UI-TARS; you can also point it at any other model that supports vision input.
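The base URL and model name are the two pieces an OpenAI-compatible server (such as vLLM) needs to route a request. The sketch below shows what a single screenshot-plus-instruction request to such an endpoint could look like, assuming a server at http://localhost:8000/v1 serving a model named "ui-tars". The helper names build_chat_request and send are hypothetical, and the sketch deliberately omits the system prompt describing the action space that the UI-TARS Desktop app sends, so raw replies may differ from what you see in the app.

```python
import base64
import json
import urllib.request


def build_chat_request(base_url: str, model: str, instruction: str, png_bytes: bytes) -> tuple[str, dict]:
    """Build an OpenAI-style chat request carrying one screenshot and one instruction."""
    image_b64 = base64.b64encode(png_bytes).decode("ascii")
    payload = {
        "model": model,  # must match the name the server exposes (e.g. --served-model-name)
        "messages": [{
            "role": "user",
            "content": [
                # The screenshot travels inline as a base64 data URL.
                {"type": "image_url", "image_url": {"url": f"data:image/png;base64,{image_b64}"}},
                {"type": "text", "text": instruction},
            ],
        }],
        "temperature": 0.0,  # deterministic output suits UI control
    }
    return base_url.rstrip("/") + "/chat/completions", payload


def send(url: str, payload: dict, api_key: str = "EMPTY") -> str:
    """POST the request and return the model's text reply."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Usage: read a screenshot with open("screenshot.png", "rb").read() (a hypothetical file), pass it to build_chat_request("http://localhost:8000/v1", "ui-tars", "Click the search box", ...), then call send(url, payload). vLLM only checks the API key if it was started with one, so the placeholder key is fine for a local server.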

Quick Start Guide: UI-TARS Desktop

Download

- Get the latest version from the releases page.

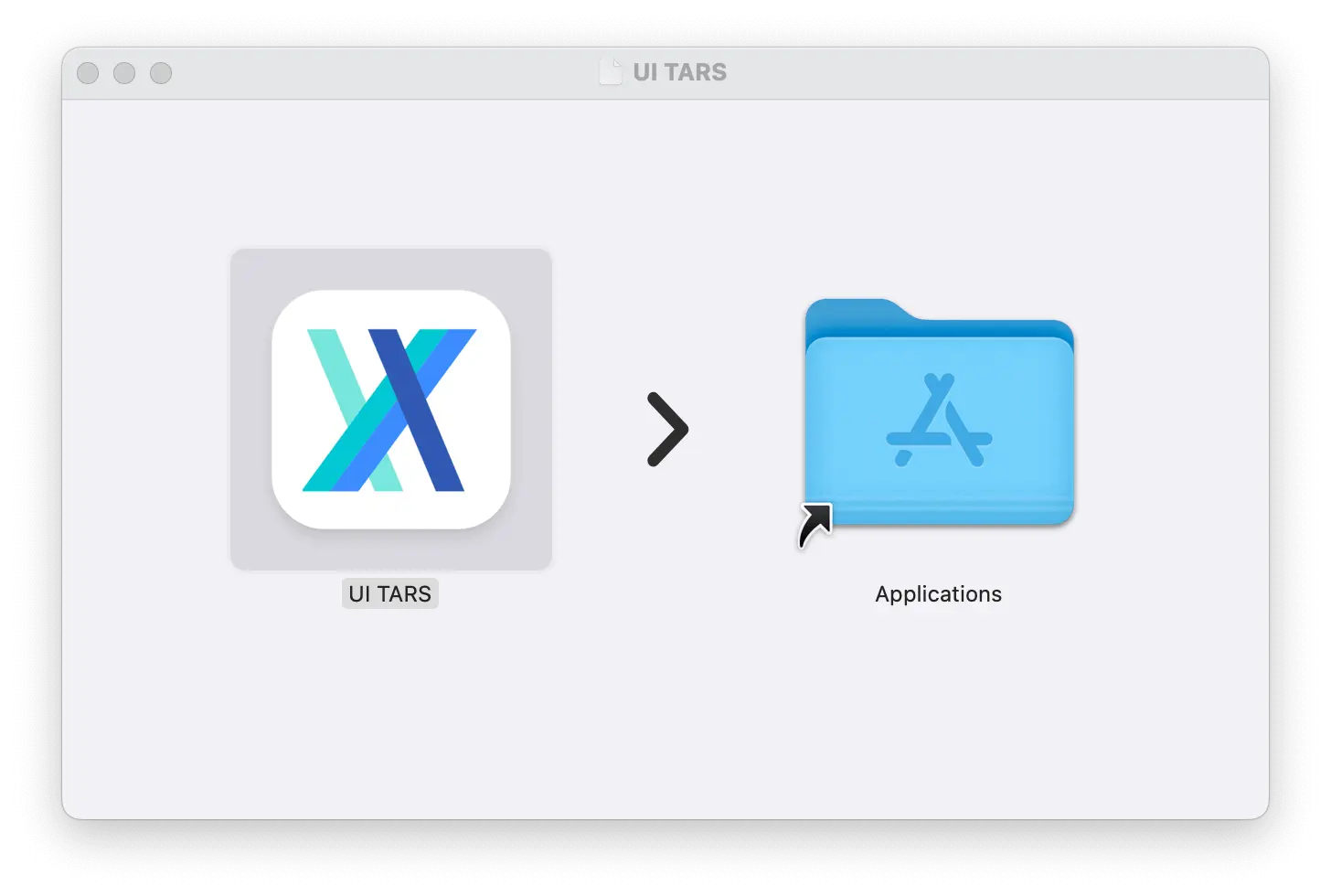

Install

macOS

- Drag UI TARS.app into the Applications folder.

- Enable permissions:

- System Settings → Privacy & Security → Accessibility (add UI TARS).

- System Settings → Privacy & Security → Screen Recording (enable for UI TARS).

- Open the app.

Windows

- Run the downloaded .exe file to launch the app.

Deployment

Cloud (Recommended)

- Deploy via Hugging Face Inference Endpoints.

Local (vLLM)

- Install dependencies:

pip install -U transformers

VLLM_VERSION=0.6.6

CUDA_VERSION=cu124

pip install vllm==${VLLM_VERSION} --extra-index-url https://download.pytorch.org/whl/${CUDA_VERSION}

- Download Model (pick one):

- 2B-SFT | 7B-SFT | 7B-DPO (recommended for most users)

- 72B-SFT | 72B-DPO (requires high-end hardware)

- Start the API service:

python -m vllm.entrypoints.openai.api_server --served-model-name ui-tars --model <model-path>
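Before pointing the desktop app at the server, it helps to confirm the server is up and exposes the expected model name. OpenAI-compatible servers, including vLLM's, list their models at GET /v1/models. This minimal sketch assumes vLLM's default address (http://localhost:8000) and the name ui-tars from the command above; the helper names served_model_ids and check_server are hypothetical.

```python
import json
import urllib.request


def served_model_ids(models_response: dict) -> list[str]:
    """Extract model ids from an OpenAI-style GET /v1/models response."""
    return [entry["id"] for entry in models_response.get("data", [])]


def check_server(base_url: str = "http://localhost:8000/v1", expected: str = "ui-tars") -> bool:
    """Return True if the server answers and serves the expected model name."""
    with urllib.request.urlopen(base_url.rstrip("/") + "/models", timeout=10) as resp:
        ids = served_model_ids(json.load(resp))
    return expected in ids
```

If check_server() raises a connection error, the server is not running yet (loading large weights can take a while); if it returns False, the name in the app settings will not match --served-model-name.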

Development

- Install dependencies:

pnpm install

- Run the app:

pnpm run dev

Testing

- Unit tests:

pnpm run test

- End-to-end tests:

pnpm run test:e2e

System Requirements

- Node.js ≥ 20

- Supported OS: Windows 10/11, macOS 10.15+.
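To confirm the Node.js requirement before running pnpm install, a small check like this one works. It is a minimal sketch that assumes node is on your PATH and prints a version string such as v20.11.1; the helper names parse_node_version and node_meets_requirement are hypothetical.

```python
import re
import shutil
import subprocess


def parse_node_version(text: str) -> tuple[int, int, int]:
    """Parse output such as 'v20.11.1' into (20, 11, 1)."""
    match = re.fullmatch(r"v?(\d+)\.(\d+)\.(\d+)", text.strip())
    if match is None:
        raise ValueError(f"unrecognized Node.js version: {text!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def node_meets_requirement(minimum_major: int = 20) -> bool:
    """Run `node --version` and compare its major version to the minimum."""
    if shutil.which("node") is None:
        return False  # Node.js is not installed or not on PATH
    out = subprocess.run(["node", "--version"], capture_output=True, text=True, check=True).stdout
    return parse_node_version(out)[0] >= minimum_major
```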

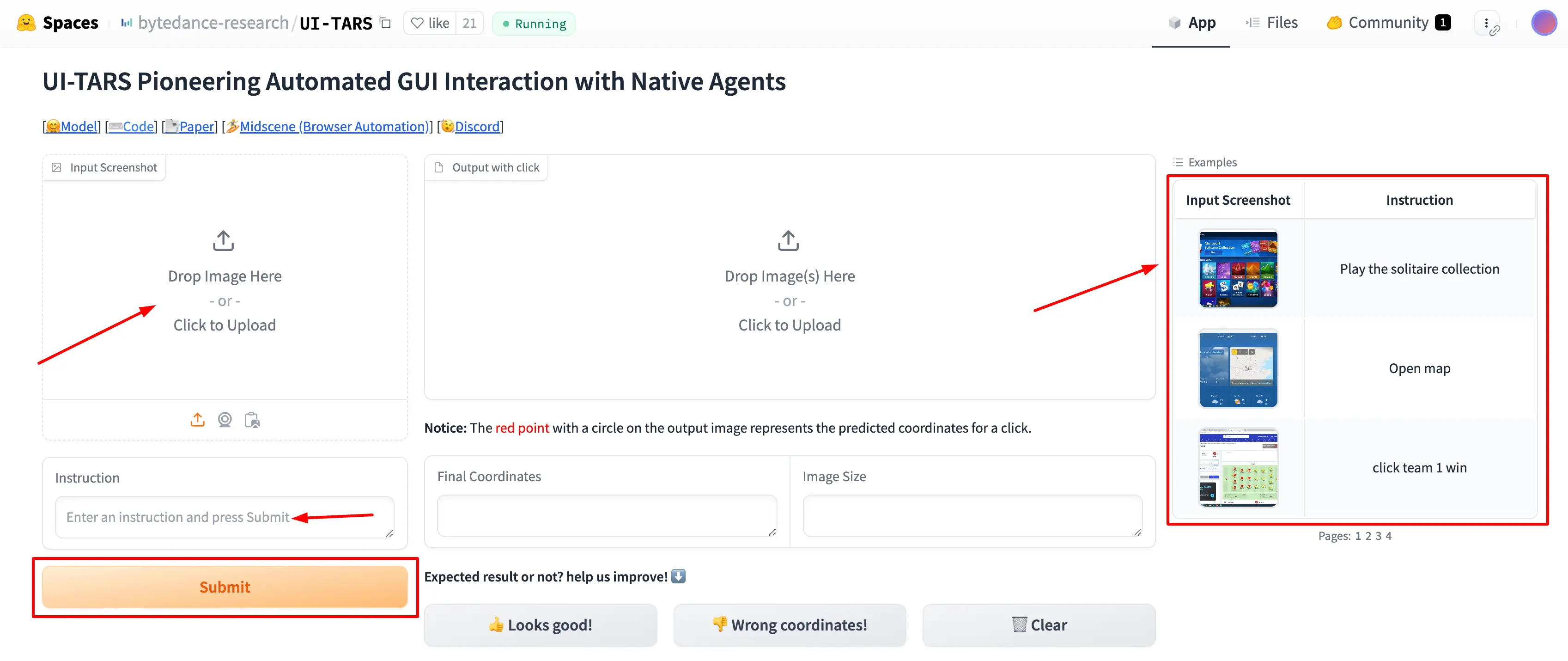

How to Use UI-TARS Space on HuggingFace?

Here's a simple guide to using the UI-TARS Space, based on the screenshot above:

- Input Screenshot:

  - Drag and drop or upload a screenshot of the GUI you want to interact with.

- Enter Instruction:

  - Type your instruction (e.g., "Click the login button") in the provided field and press Submit.

- Output with Click:

  - This panel shows your screenshot with the model's predicted click location.

  - The red circle on the output image marks where the model would click.

- Review Results:

  - Check the Final Coordinates and Image Size in the table.

  - Provide feedback on whether the result matches your expectation to help improve the model.
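The Final Coordinates and Image Size fields are related: a grounding model often predicts a point on a normalized grid, which then has to be scaled to the screenshot's pixel size before anything is clicked. The sketch below assumes a 0 to 1000 grid; that is an assumption, since some model versions may report absolute pixel coordinates instead, so check the model card. The helper name to_pixels is hypothetical.

```python
def to_pixels(point: tuple[int, int], image_size: tuple[int, int], scale: int = 1000) -> tuple[int, int]:
    """Map a point on a 0..scale grid to pixels for an image of (width, height)."""
    x, y = point
    width, height = image_size
    px = round(x / scale * width)
    py = round(y / scale * height)
    # Clamp so a point on the grid's far edge never lands outside the image.
    return min(max(px, 0), width - 1), min(max(py, 0), height - 1)
```

For example, the grid point (500, 500) on a 1920×1080 screenshot maps to the pixel (960, 540), the center of the screen.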

Final Thoughts

UI-TARS is a powerful, open-source AI agent that can automate tasks in both your browser and desktop applications. Its ability to handle complex tasks, learn from mistakes, and outperform other models makes it a standout tool.

I highly recommend trying it out, especially if you’re currently using more expensive alternatives like Claude.
