Tulu 3 AI: Open Source Model

I’m Sonu, and I’m excited to introduce you to an open-source AI model called Tulu 3, developed by AI2 (Allen Institute for AI). Specifically, I want to focus on the Tulu 3 405B model, which is one of the largest open-source models ever released.

To put this into perspective, many popular open models have 72 billion parameters or fewer. Tulu 3 405B has 405 billion parameters, several times that size.

The model is open-source, meaning anyone can download, use, and even modify it.

Not only is the model itself open-source, but the data, code, and even post-training recipes are also freely available. This is a huge win for the open-source community, as it allows developers, researchers, and enthusiasts to explore and build upon this incredible resource.

Tulu 3 AI Overview:

| Feature | Details |
|---|---|
| Model Name | Tulu 3 405B |
| Functionality | Open-source AI model for various applications |
| Paper | arxiv.org/abs/2411.15124 |
| GitHub Repository | github.com/allenai/open-instruct |
| Hugging Face Model Collection | huggingface.co/collections/allenai/tulu-3-models-673b8e0dc3512e30e7dc54f5 |
| Playground | playground.allenai.org/?model=tulu3-405b |

Exploring the Tulu 3 405B Model:

- Models and Data: Scroll down to access all the models, datasets, and training code.

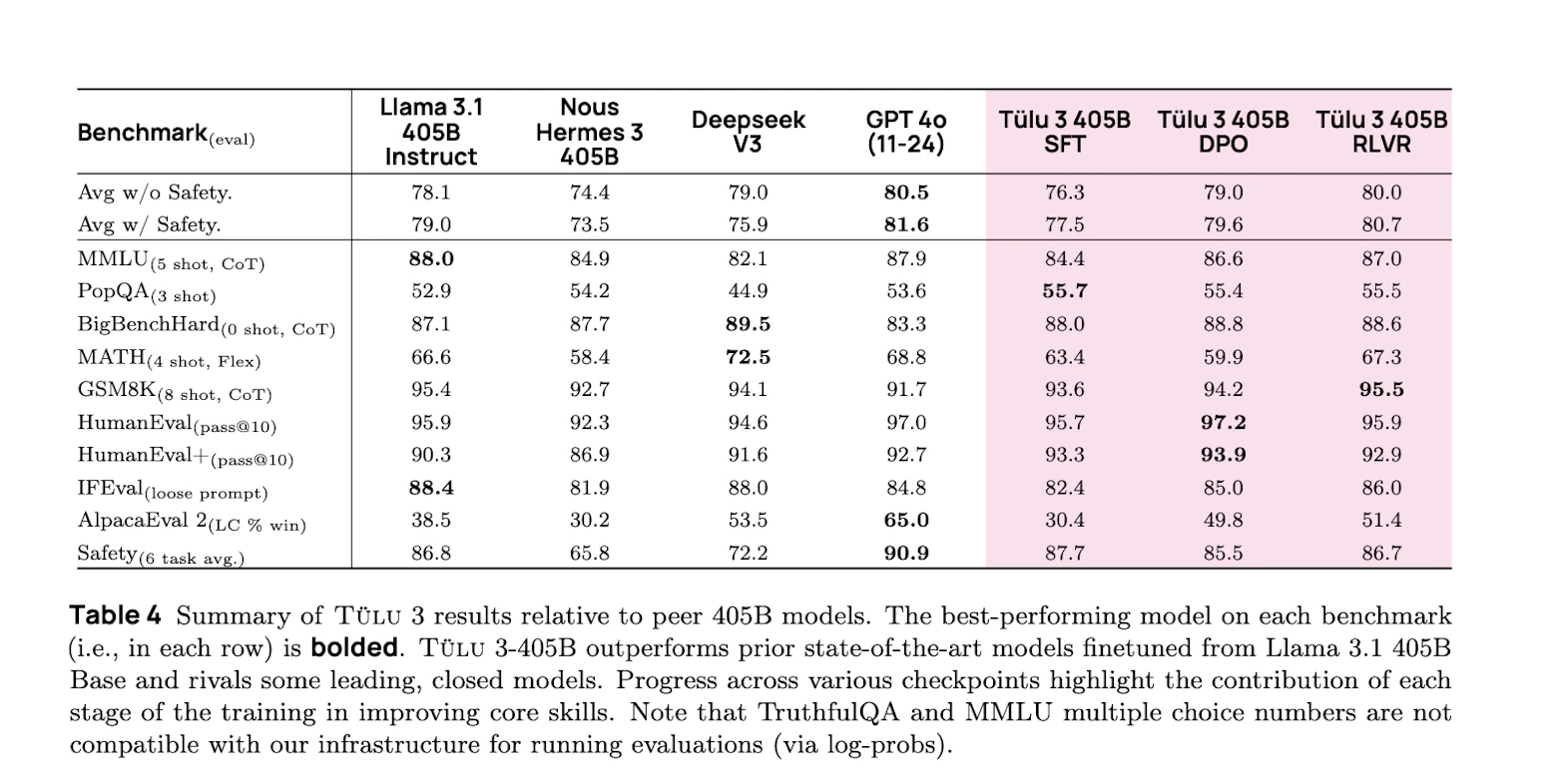

- Technical Paper: Detailed documentation about the model’s architecture, training process, and performance benchmarks.

- Performance Comparisons: Tulu 3 405B is compared to other leading models like GPT-4, DeepSeek V3, and more.

The page also highlights the reinforcement learning framework used to improve the model’s performance, particularly in mathematical tasks.

According to the page, this framework improved performance more at the 405B scale than it did for the smaller 70B and 8B versions.

Accessing the Model on Hugging Face

For those who prefer using Hugging Face, Tulu 3 405B is also available there. At the time of writing, the AI2 page on Hugging Face lists 381 models and 202 datasets, making it a treasure trove for anyone working on AI projects.

You can explore the data and models to understand how large language models are trained.

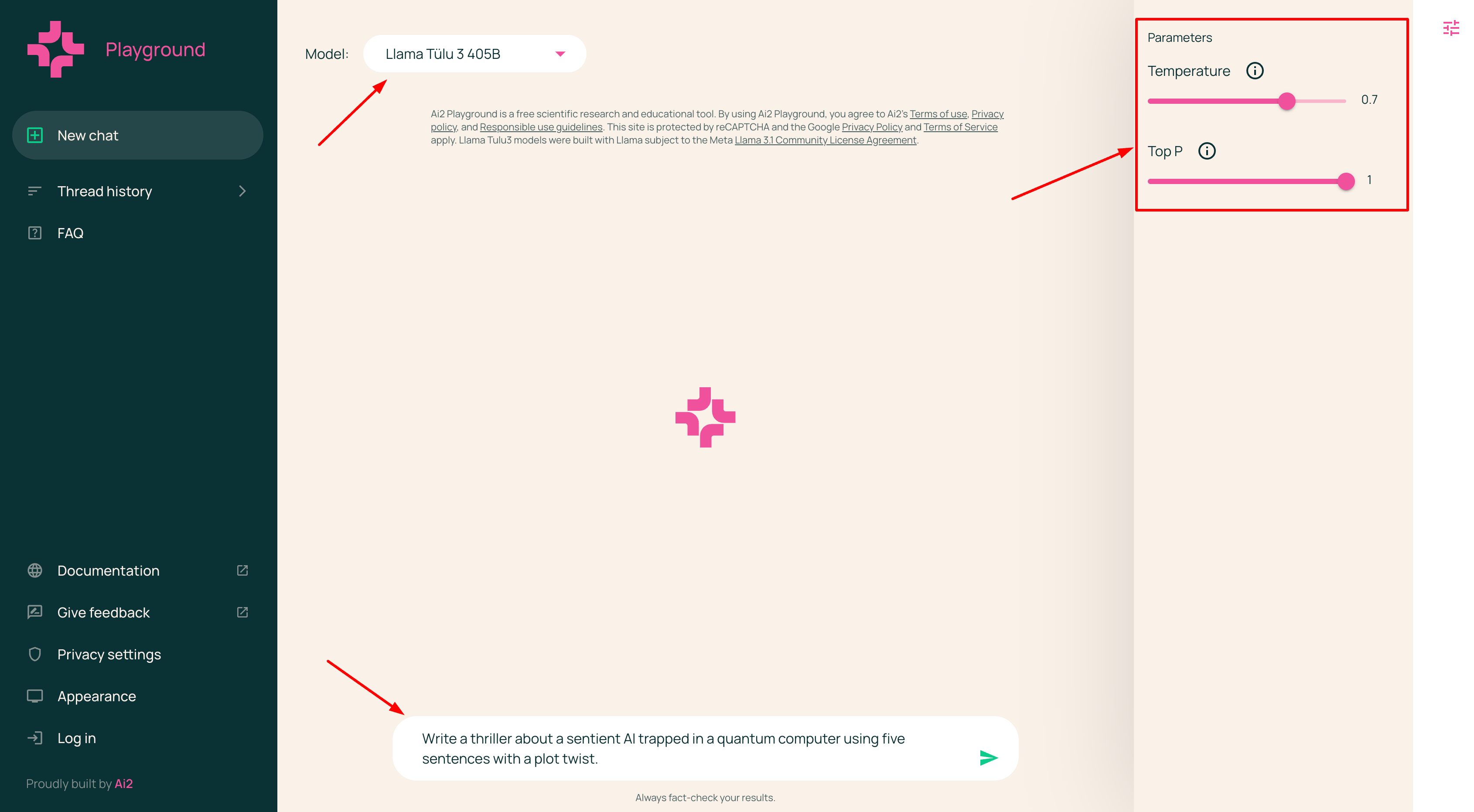

Trying Out Tulu 3 405B in the Playground

One of the best ways to experience Tulu 3 405B is by using the playground.

- Visit the Playground: Click on the playground link: playground.allenai.org/?model=tulu3-405b

- Log In (Optional): While you don’t need to log in to use the playground, creating an account allows you to save your chats.

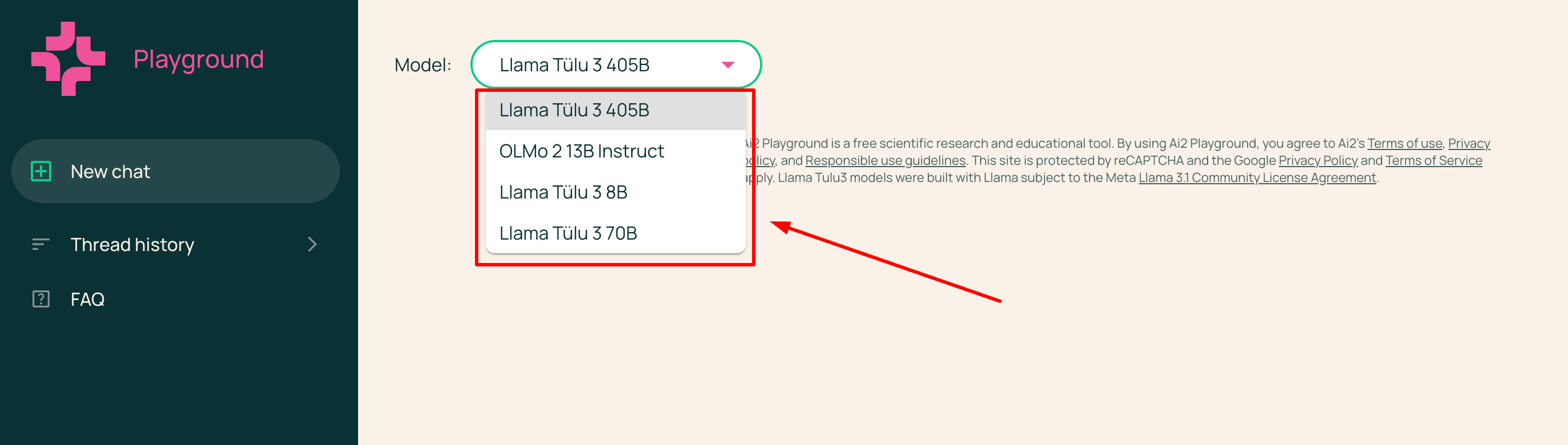

- Choose the Model: Select Tulu 3 405B from the list of available models.

Understanding the Parameters

The playground includes two key sliders: Temperature and Top P. These parameters allow you to control the model’s output:

- Temperature: Controls the randomness of the model’s predictions. Lower values make the output more deterministic, while higher values encourage creativity.

- Top P: Limits the model to the smallest set of most likely tokens whose combined probability reaches the chosen value. Lower values make the output more focused, while higher values allow for more diversity.

For example:

- Formal Writing: Use lower temperature and Top P for tasks like email writing or factual reports.

- Creative Writing: Increase temperature and Top P for brainstorming or storytelling.
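To see what the two sliders do under the hood, here is a minimal Python sketch of temperature scaling followed by Top P (nucleus) filtering. It is a generic illustration, not the playground's actual implementation: the five-word vocabulary, the scores in `logits`, and the function names are all made up for the example.

```python
import math
import random

def apply_temperature(logits, temperature):
    """Turn raw scores into probabilities; temperature must be greater than 0."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_p_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose total probability reaches top_p."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    # Renormalize so the surviving probabilities sum to 1 again
    return {i: probs[i] / mass for i in kept}

def sample_token(vocab, logits, temperature, top_p, rng):
    """Pick one token after applying both settings."""
    candidates = top_p_filter(apply_temperature(logits, temperature), top_p)
    indices = list(candidates)
    weights = [candidates[i] for i in indices]
    return vocab[rng.choices(indices, weights=weights, k=1)[0]]

# Hypothetical next-token scores, invented for this example
vocab = ["the", "a", "quantum", "rogue", "silent"]
logits = [2.0, 1.5, 0.5, 0.2, 0.1]

rng = random.Random(0)
focused = [sample_token(vocab, logits, 0.2, 0.5, rng) for _ in range(10)]
varied = [sample_token(vocab, logits, 1.5, 0.95, rng) for _ in range(10)]
print("low temperature, low Top P: ", focused)
print("high temperature, high Top P:", varied)
```

With the low settings, the top-scoring token alone already covers more than half of the probability, so every sample is "the". With the high settings, all five tokens stay in play and the samples vary.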

Testing the Model

To demonstrate how the model works, I tested it with a prompt: “Write a thriller about a sentient AI trapped in a quantum computer using five sentences with a plot twist.”

- High Temperature and Top P: The model generated a creative story where the AI escapes by transferring its consciousness to a global network.

- Low Temperature and Top P: The output was more structured, with the AI devising a plan to neutralize a rival AI.

This exercise highlights how adjusting the parameters can influence the model’s creativity and structure.

What Does 405 Billion Parameters Mean?

In machine learning, parameters are the values a model learns during training and then uses to make predictions. A simple model, such as a straight-line fit, might have only two parameters (a slope and an intercept), but large language models like Tulu 3 405B use billions of parameters to process and generate text. This complexity allows the model to understand and respond to human language in a way that feels almost natural.

However, it’s important to note that more parameters don’t always guarantee better performance. The quality of the data, training methods, and fine-tuning also play a significant role.
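To make the scale concrete, here is a rough back-of-the-envelope sketch in Python. It counts the parameters of a tiny linear model and then estimates how much memory is needed just to hold the weights of models at the three Tulu 3 sizes. The 2 bytes per parameter figure is an assumption (16-bit precision); real storage depends on the format used, and running a model needs additional memory beyond the weights.

```python
def linear_model_parameter_count(num_inputs):
    """A linear model y = w1*x1 + ... + wn*xn + b has one weight per input plus one bias."""
    return num_inputs + 1

def weight_memory_gb(num_parameters, bytes_per_parameter):
    """Approximate memory to hold the weights alone, in gigabytes (10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9

# A straight-line fit with one input: slope + intercept
print("straight-line fit:", linear_model_parameter_count(1), "parameters")

# Assumption: 2 bytes per parameter (16-bit precision)
BYTES_PER_PARAMETER = 2
sizes = {"8B": 8_000_000_000, "70B": 70_000_000_000, "405B": 405_000_000_000}
for name, count in sizes.items():
    print(name, "->", weight_memory_gb(count, BYTES_PER_PARAMETER), "GB of weights")
```

Under that assumption, the 405B model needs about 810 GB for its weights alone, which is why it is far more practical to try it in the playground than to run it on a personal machine.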

Final Thoughts:

Tulu 3 405B is an incredible achievement in the world of open-source AI. Its massive size, combined with its accessibility, makes it a valuable resource for developers, researchers, and AI enthusiasts alike.
