Tencent Hunyuan3D-2.0: Convert Any 2D Image to 3D

Table of Contents

- What is Hunyuan3D 2.0?

- Hunyuan3D 2 Overview

- Architecture of Hunyuan3D 2.0

- Getting Started with Hunyuan3D-2.0

- Installation Requirements

- API Usage

- Shape Generation (Hunyuan3D-DiT)

- Texture Synthesis (Hunyuan3D-Paint)

- Gradio App

- How to Use Hunyuan3D-2 on Hugging Face?

- **Step 1: Access the Tool**

- **Step 2: Choose Your Input Type**

- **Step 3: Configure Advanced Settings (Optional)**

- **Step 4: Generate the 3D Model**

- **Step 5: Review and Download**

- **Additional Features**

- **Tips for Better Results**

- Key Features of Hunyuan3D-2

- Texture Customization

- Leaderboard Performance

- Open-Source Plan

- Accessibility and Open-Source Availability

- Free to Try

- Open-Source Release

- Model Size and Hardware Requirements

- Demonstration of Hunyuan3D-2.0

- Performance and Leaderboard

- Testing Hunyuan3D-2.0

- Conclusion

In this article, I will walk you through Tencent Hunyuan3D-2.0, a powerful large-scale 3D asset creation system that generates high-quality textured 3D assets. This system is designed to decouple geometry and texture generation, resulting in more detailed geometric structures and richer texture colors.

What is Hunyuan3D 2.0?

Hunyuan3D-2.0 is a two-stage 3D asset creation system that separates the generation of geometry and texture. This approach allows for greater flexibility and precision in creating 3D models.

The system consists of two main components:

- Foundation Shape Generative Model: Built on a scalable diffusion transformer architecture, this model creates geometry that aligns with specific conditions, providing a solid foundation for various downstream applications.

- Large-Scale Texture Synthesis Model: This model produces high-resolution and vibrant texture maps. It is designed to work with both generated and real-world meshes, making it highly versatile.

By decoupling geometry and texture generation, Hunyuan3D-2.0 ensures that both aspects of the 3D model are optimized independently, leading to superior results.

Hunyuan3D 2 Overview

| Feature | Details |
|---|---|
| Model Name | Hunyuan3D-2.0 |
| Functionality | Converts 2D images to high-quality 3D models with textures |
| Paper | arxiv.org/abs/2501.12202 |
| Usage Options | Hugging Face Demo, Local Installation |
| Hugging Face Space | huggingface.co/spaces/tencent/Hunyuan3D-2 |
| GitHub Repository | github.com/Tencent/Hunyuan3D-2 |
| Official Website | 3d-models.hunyuan.tencent.com/ |

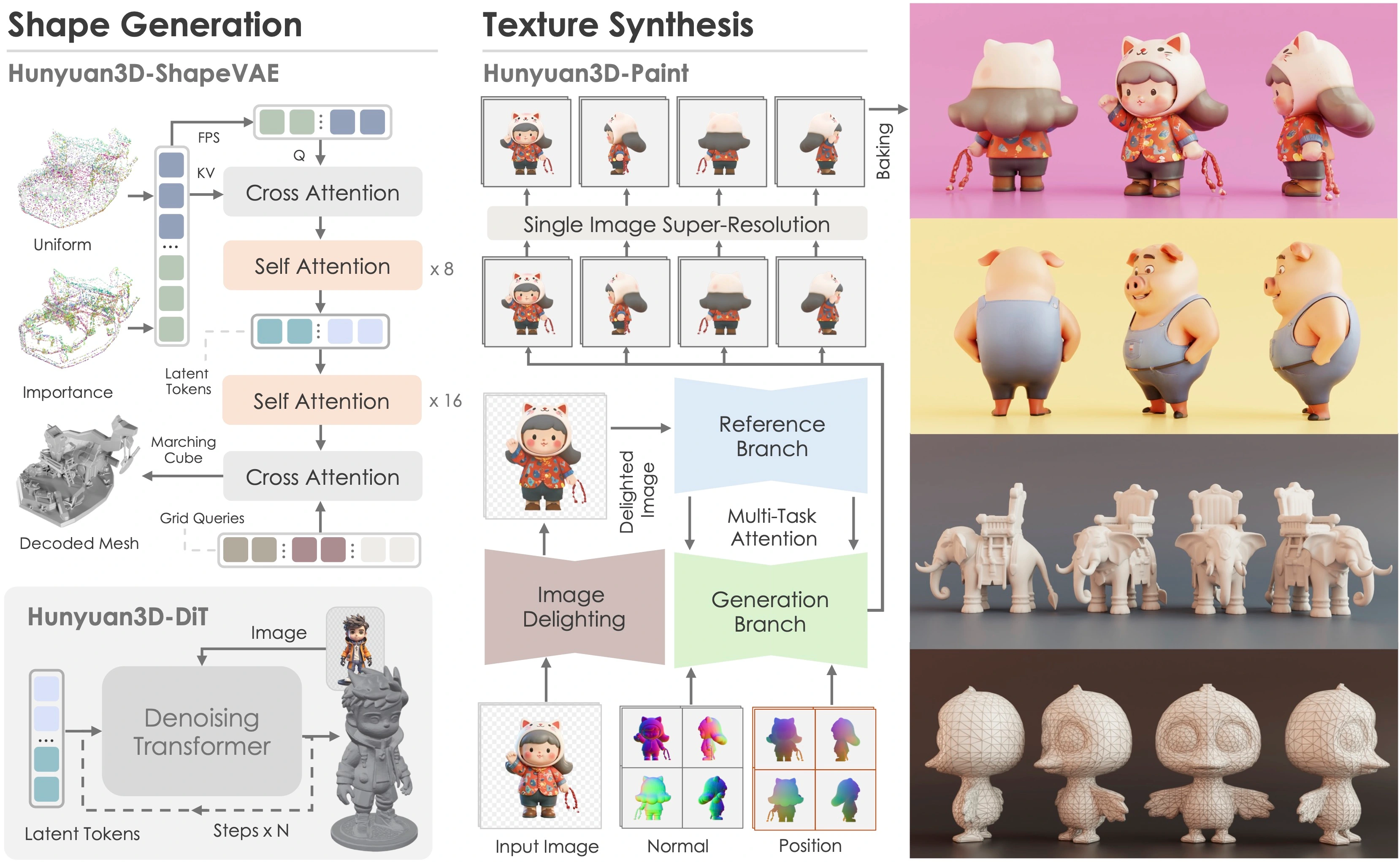

Architecture of Hunyuan3D 2.0

The architecture of Hunyuan3D-2.0 is based on a two-stage generation pipeline:

- Bare Mesh Creation: The first stage involves generating a bare mesh, which serves as the foundation for the 3D model.

- Texture Map Synthesis: The second stage focuses on creating a texture map for the mesh. This step allows for the application of detailed and vibrant textures to the generated or handcrafted mesh.

This two-stage approach effectively decouples the challenges of shape and texture generation, providing flexibility and precision in the creation process.
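To make the decoupling concrete, here is a toy sketch of the two-stage flow. Everything in it (`Mesh`, `generate_shape`, `synthesize_texture`) is a hypothetical stand-in I made up for illustration, not part of the Hunyuan3D-2.0 code. It only shows the shape of the idea: stage two takes any mesh, generated or handcrafted, and leaves the geometry untouched.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Mesh:
    """Hypothetical stand-in for a mesh: geometry plus an optional texture."""
    vertices: tuple
    faces: tuple
    texture: Optional[str] = None  # None means a bare (untextured) mesh


def generate_shape(image_path: str) -> Mesh:
    """Stage 1 stand-in: returns a bare mesh (here, a fixed single triangle)."""
    return Mesh(vertices=((0, 0, 0), (1, 0, 0), (0, 1, 0)), faces=((0, 1, 2),))


def synthesize_texture(mesh: Mesh, image_path: str) -> Mesh:
    """Stage 2 stand-in: attaches a texture label and keeps the geometry as-is."""
    return replace(mesh, texture=f"texture-from:{image_path}")


# Generated mesh: stage 1 followed by stage 2.
bare = generate_shape("assets/demo.png")
textured = synthesize_texture(bare, "assets/demo.png")

# Handcrafted mesh: stage 1 is skipped and only stage 2 runs.
handcrafted = Mesh(vertices=((0, 0, 0), (2, 0, 0), (0, 2, 0)), faces=((0, 1, 2),))
retextured = synthesize_texture(handcrafted, "leather.png")
```

Because the two stages only meet at the mesh, either one can be swapped or rerun without touching the other.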

Getting Started with Hunyuan3D-2.0

To use Hunyuan3D-2.0, you can follow these steps via code or the Gradio App. Below, I’ll guide you through the installation and usage process.

Installation Requirements

Before you begin, ensure that you have the necessary software installed:

- Install PyTorch: Visit the official PyTorch website and install the appropriate version for your system.

- Install Other Requirements: Use the following command to install the required dependencies:

  pip install -r requirements.txt

- Texture Generation Setup:

  - Navigate to the custom rasterizer directory, install it, and return to the repository root:

    cd hy3dgen/texgen/custom_rasterizer

    python3 setup.py install

    cd ../../..

  - For the differentiable renderer, run:

    cd hy3dgen/texgen/differentiable_renderer

    bash compile_mesh_painter.sh

    (On Windows, use python3 setup.py install instead of bash compile_mesh_painter.sh.)
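After installing, a quick sanity check can save some debugging time. The sketch below uses only the standard library to report whether a few modules can be found. The module list is my assumption (`torch` and `hy3dgen` appear in this guide, `trimesh` is implied by the mesh output type), so adjust it to match your setup. Run it from the repository root so that `hy3dgen` is on the import path.

```python
import importlib.util

# Assumed top-level module names; adjust to match your own environment.
REQUIRED_MODULES = ("torch", "hy3dgen", "trimesh")


def check_environment(modules=REQUIRED_MODULES):
    """Return {module_name: bool} telling whether each module can be located."""
    # find_spec only locates a top-level module; it does not import it.
    return {name: importlib.util.find_spec(name) is not None for name in modules}


if __name__ == "__main__":
    for name, found in check_environment().items():
        print(f"{name:10s} {'ok' if found else 'MISSING'}")
```

This only confirms that the modules are discoverable. It does not verify that the compiled texture extensions were built correctly.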

API Usage

Hunyuan3D-2.0 provides a diffusers-like API for both shape generation and texture synthesis. Here’s how you can use it:

Shape Generation (Hunyuan3D-DiT)

To generate a 3D shape, use the following code:

from hy3dgen.shapegen import Hunyuan3DDiTFlowMatchingPipeline

pipeline = Hunyuan3DDiTFlowMatchingPipeline.from_pretrained('tencent/Hunyuan3D-2')

mesh = pipeline(image='assets/demo.png')[0]

The output mesh is a trimesh object, which can be saved in formats like .glb or .obj.
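Since the result is a trimesh object, saving it comes down to calling its `export` method, which picks the format from the file extension. Here is a minimal sketch that wraps generation and saving in one function. The function name `image_to_mesh_file` and the suffix check are my own additions for illustration. The pipeline calls are the same ones shown above.

```python
from pathlib import Path

# Formats named in this guide; trimesh infers the format from the suffix.
SUPPORTED_SUFFIXES = {".glb", ".obj"}


def image_to_mesh_file(image_path, out_path, model_id="tencent/Hunyuan3D-2"):
    """Generate a bare mesh from an image and save it to out_path."""
    out = Path(out_path)
    if out.suffix.lower() not in SUPPORTED_SUFFIXES:
        raise ValueError(f"unsupported output format: {out.suffix!r}")

    # Imported lazily so the check above fails fast before any model loading.
    from hy3dgen.shapegen import Hunyuan3DDiTFlowMatchingPipeline

    pipeline = Hunyuan3DDiTFlowMatchingPipeline.from_pretrained(model_id)
    mesh = pipeline(image=image_path)[0]
    mesh.export(str(out))  # trimesh writes .glb or .obj based on the suffix
    return out
```

A typical call would be `image_to_mesh_file('assets/demo.png', 'demo.glb')`. The first run downloads the model weights, so expect it to take a while.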

Texture Synthesis (Hunyuan3D-Paint)

To apply textures to the generated mesh, use the following code:

from hy3dgen.texgen import Hunyuan3DPaintPipeline

from hy3dgen.shapegen import Hunyuan3DDiTFlowMatchingPipeline

# Generate a mesh first

pipeline = Hunyuan3DDiTFlowMatchingPipeline.from_pretrained('tencent/Hunyuan3D-2')

mesh = pipeline(image='assets/demo.png')[0]

# Apply texture

pipeline = Hunyuan3DPaintPipeline.from_pretrained('tencent/Hunyuan3D-2')

mesh = pipeline(mesh, image='assets/demo.png')

For more advanced usage, such as text-to-3D generation or texture generation for handcrafted meshes, refer to the minimal_demo.py script.

Gradio App

You can also host a Gradio App on your local machine by running:

python3 gradio_app.py

Alternatively, you can visit the Hunyuan3D platform for quick access without hosting the app yourself.

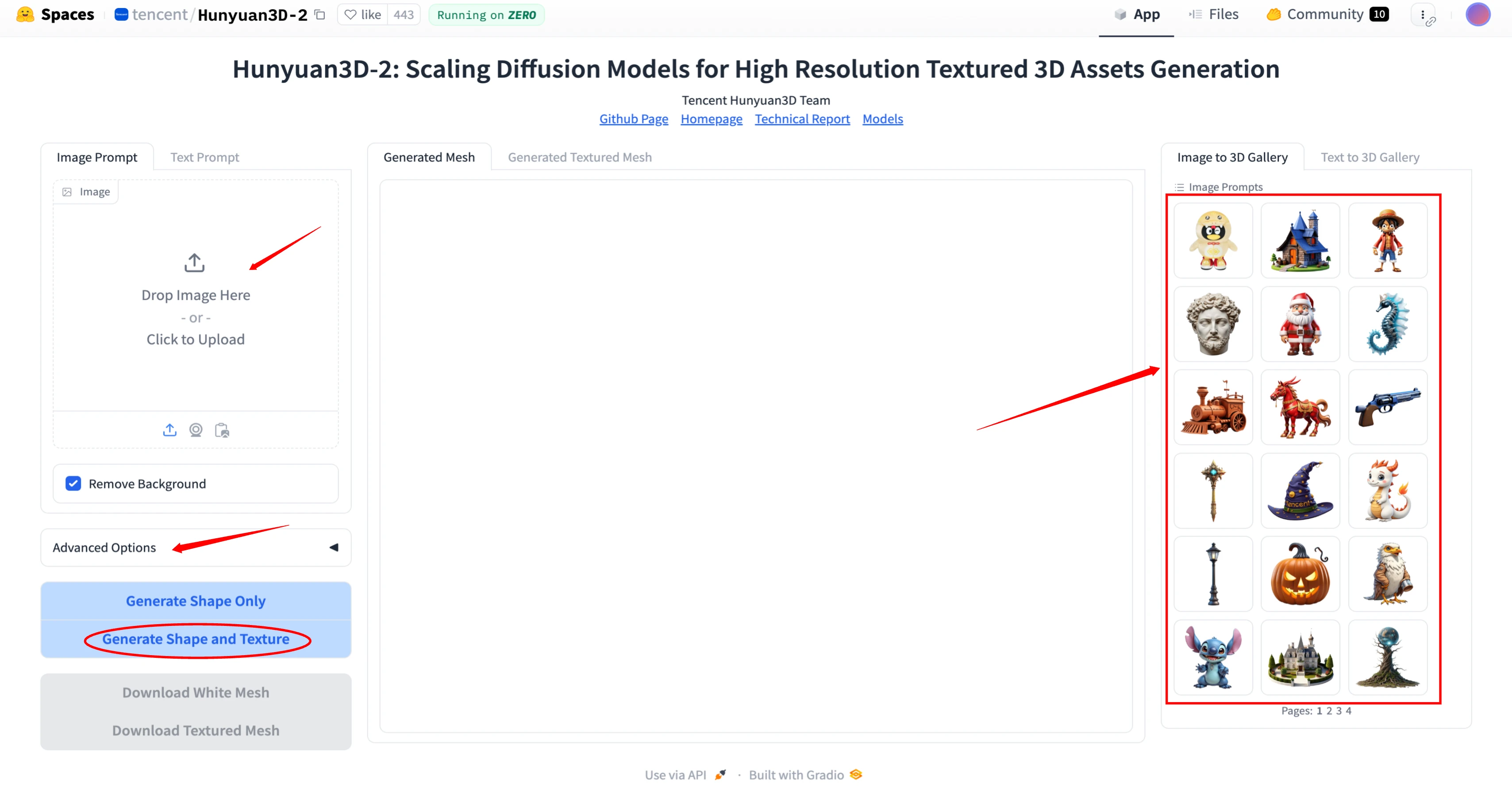

How to Use Hunyuan3D-2 on Hugging Face?

Here’s a concise step-by-step guide to using Hunyuan3D-2 on Hugging Face, based on the interface shown in the image above:

Step 1: Access the Tool

- Visit the Hunyuan3D-2 Hugging Face Space: huggingface.co/spaces/tencent/Hunyuan3D-2

- You’ll see options for Image Prompt and Text Prompt.

Step 2: Choose Your Input Type

- Option 1: Text Prompt

  - Click the Text Prompt field.

  - Enter a description (e.g., “a wooden chair with floral carvings”).

- Option 2: Image Prompt

  - Click Drop Image Here or Click to Upload to add a reference image.

  - Enable Remove Background if your image has a distracting background.

Step 3: Configure Advanced Settings (Optional)

- Click Advanced Options to adjust parameters like:

  - Mesh resolution.

  - Texture detail level.

  - Background removal strength (if enabled).

Step 4: Generate the 3D Model

- Select your output type:

  - Generate Shape Only: Creates the 3D mesh without textures.

  - Generate Shape and Texture: Produces a fully textured 3D model.

- Click Generate to start the process.

Step 5: Review and Download

- After generation, preview the results:

  - White Mesh: View the untextured 3D shape.

  - Textured Mesh: See the final model with applied colors/details.

- Download your files:

  - Download White Mesh: For the base 3D structure.

  - Download Textured Mesh: For the complete model.

Additional Features

- Gallery: Explore the Image-to-3D or Text-to-3D galleries for inspiration.

- API Access: The Space is built with Gradio, so you can use the Use via API link in the page footer to integrate Hunyuan3D-2 into your workflow programmatically.
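For programmatic access, one option is the `gradio_client` package, which can connect to a public Space and list the endpoints it exposes. The sketch below only prints that listing. I have not hard-coded any endpoint names or parameters because they are defined by the Space and may change. The helper name `print_space_api` is my own, and the code assumes `gradio_client` is installed and the Space is reachable.

```python
def print_space_api(space_id="tencent/Hunyuan3D-2"):
    """Connect to a Hugging Face Space and print the endpoints it exposes."""
    owner, sep, name = space_id.partition("/")
    if not (owner and sep and name):
        raise ValueError("space_id must look like 'owner/name'")

    # Imported lazily: requires `pip install gradio_client` and network access.
    from gradio_client import Client

    client = Client(space_id)
    client.view_api()  # prints the available endpoints and their parameters
```

Once you know the endpoint names from that listing, you can call them with `client.predict(...)` using the parameters it reports.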

Tips for Better Results

- Use descriptive text prompts for clearer outputs.

- For image inputs, ensure the subject is centered and well-lit.

- Experiment with Advanced Options to refine complex models.

Key Features of Hunyuan3D-2

Texture Customization

One of the standout features of Hunyuan3D-2 is its ability to apply different textures to the same 3D shape. For instance:

- You can start with a teapot shape and overlay various textures to see how it changes the appearance.

- Similarly, you can take a boot model and try different textures, such as brown leather or other materials, to see the results.

This flexibility makes it a valuable tool for designers and creators who want to experiment with different looks for their models.
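In code, trying several looks on one shape amounts to running the texture pipeline once per reference image on the same base mesh. The sketch below assumes the paint pipeline accepts a mesh loaded from disk with trimesh, in line with the note that the texture model works with existing meshes. The helper names, file names, and output naming scheme are hypothetical.

```python
from pathlib import Path


def variant_output_paths(mesh_path, texture_images):
    """Build one output file name per texture image, e.g. teapot_gold.glb."""
    stem = Path(mesh_path).stem
    return [f"{stem}_{Path(image).stem}.glb" for image in texture_images]


def texture_variants(mesh_path, texture_images, model_id="tencent/Hunyuan3D-2"):
    """Apply each reference image to the same base mesh and save the results."""
    # Imported lazily: these need the full Hunyuan3D-2 installation.
    import trimesh
    from hy3dgen.texgen import Hunyuan3DPaintPipeline

    base = trimesh.load(mesh_path, force="mesh")  # force a single mesh, not a scene
    painter = Hunyuan3DPaintPipeline.from_pretrained(model_id)

    outputs = variant_output_paths(mesh_path, texture_images)
    for image, out in zip(texture_images, outputs):
        # Copy as a precaution so each variant starts from the untouched base.
        textured = painter(base.copy(), image=image)
        textured.export(out)
    return outputs
```

For example, `texture_variants('boot.glb', ['brown_leather.png', 'suede.png'])` would write `boot_brown_leather.glb` and `boot_suede.glb`. The pipeline is loaded once and reused across all variants.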

Leaderboard Performance

Hunyuan3D-2 also performs well in practice. There’s a leaderboard for AI 3D model generators where users can blind-test different models. At the time of writing, Hunyuan3D-2 is ranked number one in these tests, ahead of Microsoft’s Trellis, which is itself a highly capable tool.

Open-Source Plan

Tencent has open-sourced Hunyuan3D-2.0. The open-source plan lists the following resources, some of which are still planned:

- Inference Code: The code required to run the models.

- Model Checkpoints: Pre-trained models for shape generation and texture synthesis.

- Technical Report: Detailed documentation on the system’s architecture and functionality.

- ComfyUI Integration: Plans to integrate Hunyuan3D-2.0 with ComfyUI.

- TensorRT Version: Optimized versions for TensorRT.

Accessibility and Open-Source Availability

Free to Try

One of the best things about Hunyuan3D-2 is that it’s free to try. Tencent has made it available on Hugging Face, a platform where users can test AI models. The interface is straightforward:

- You can choose to upload an image or enter a text prompt.

- The tool then generates the 3D model based on your input.

Open-Source Release

Tencent has also released Hunyuan3D-2 as an open-source tool. The GitHub repository (github.com/Tencent/Hunyuan3D-2) includes the instructions for downloading and using the model. Additionally, there are plans to integrate it into ComfyUI, making it even more accessible for users.

Model Size and Hardware Requirements

The models used in Hunyuan3D-2 range from 1.3 billion to 2.6 billion parameters. While this might sound large, it’s relatively small compared to some large language models. As a result, you can run this tool on a medium-tier GPU, making it accessible to a wider audience.
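As a rough back-of-the-envelope check, you can estimate how much memory the weights alone would need. The sketch below assumes 16-bit weights (2 bytes per parameter), which is my assumption rather than a documented setting. It ignores activations, intermediate buffers, and framework overhead, so real usage will be higher.

```python
BYTES_PER_PARAM_FP16 = 2  # assumption: weights stored as 16-bit floats


def weight_memory_gb(num_params, bytes_per_param=BYTES_PER_PARAM_FP16):
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


for label, params in (("1.3B model", 1.3e9), ("2.6B model", 2.6e9)):
    print(f"{label}: ~{weight_memory_gb(params):.1f} GB of weights at fp16")
```

Under these assumptions the weights come to roughly 2.6 GB and 5.2 GB, which is why a mid-range GPU is a plausible target.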

Demonstration of Hunyuan3D-2.0

Hunyuan3D-2.0 is an upgrade from its previous version and allows users to create 3D models from text prompts or images. Here’s how it works:

- Input: Provide a text description or upload an image.

- Shape Generation: The system uses a diffusion transformer to generate a 3D shape from the input.

- Texture Creation: A texture is created for the 3D shape.

- Combination: The shape and texture are combined to produce a complete 3D model.

This decoupled approach allows users to apply different textures to the same base shape, offering great flexibility. For example, you can overlay various textures on a teapot or a boot.

Performance and Leaderboard

Hunyuan3D-2.0 has been ranked number one on the AI 3D model generator leaderboard, outperforming even Microsoft’s Trellis. This ranking is based on blind tests conducted by users.

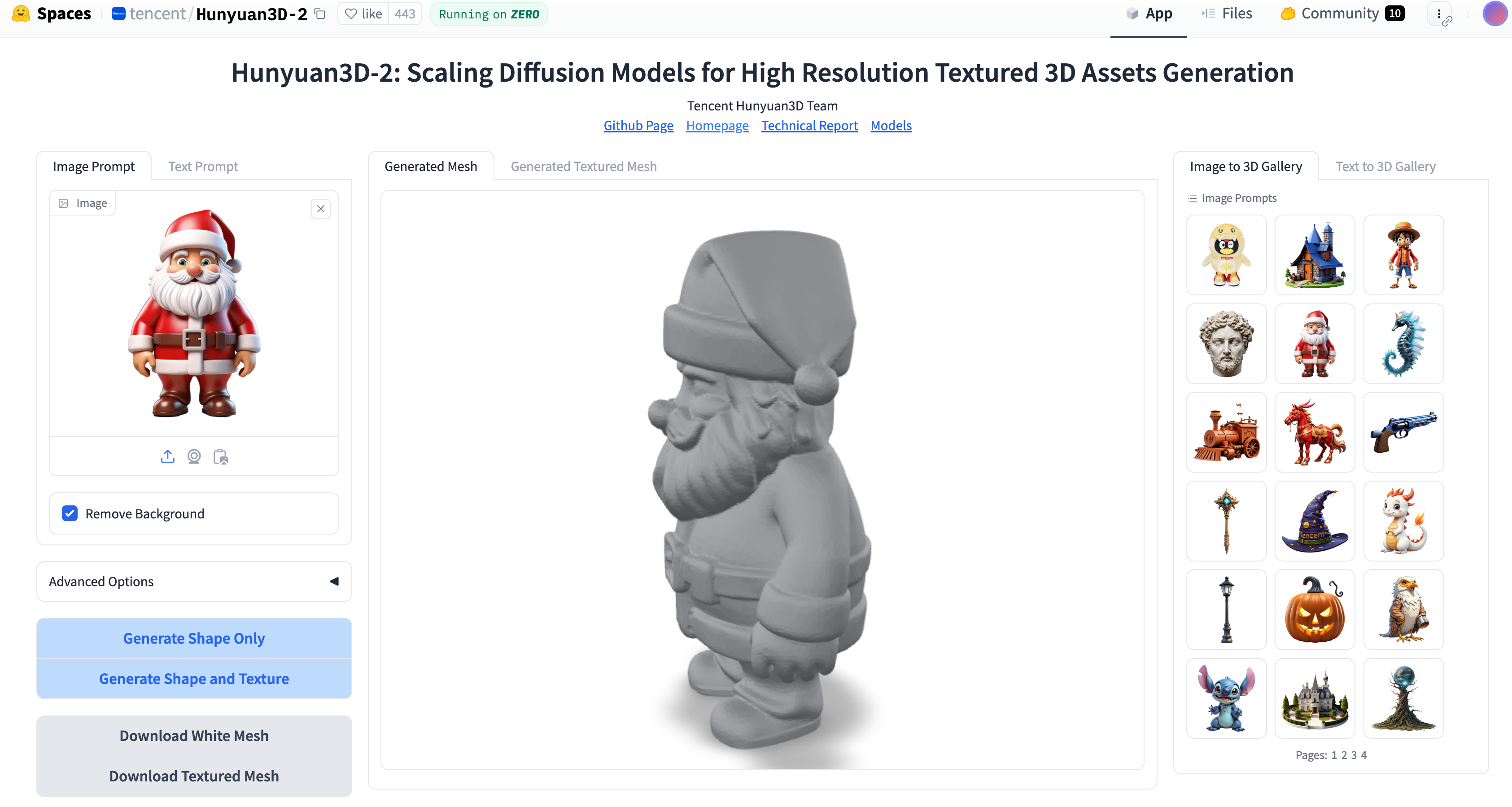

Testing Hunyuan3D-2.0

You can test Hunyuan3D-2.0 using the free Hugging Face space provided by Tencent. Here’s how:

- Select Input: Choose to either upload an image or enter a text prompt.

- Generate Model: The system will generate both the shape and texture for the 3D model.

For example, entering the prompt “a lovely rabbit eating carrots” produces a detailed and consistent 3D model, complete with texture. Similarly, uploading an image of a Gundam results in a highly detailed 3D shape, even estimating the back of the model accurately.

Conclusion

Tencent Hunyuan3D-2.0 is a powerful and flexible 3D asset creation system that decouples geometry and texture generation. Its two-stage pipeline, open-source availability, and strong leaderboard performance make it a valuable tool for 3D artists and developers. Whether you’re generating models from text prompts or images, Hunyuan3D-2.0 delivers high-quality results.

Related Posts

3DTrajMaster: A Step-by-Step Guide to Video Motion Control

Browser Use is an AI-powered browser automation framework that lets AI agents control your browser to automate web tasks like scraping, form filling, and website interactions.

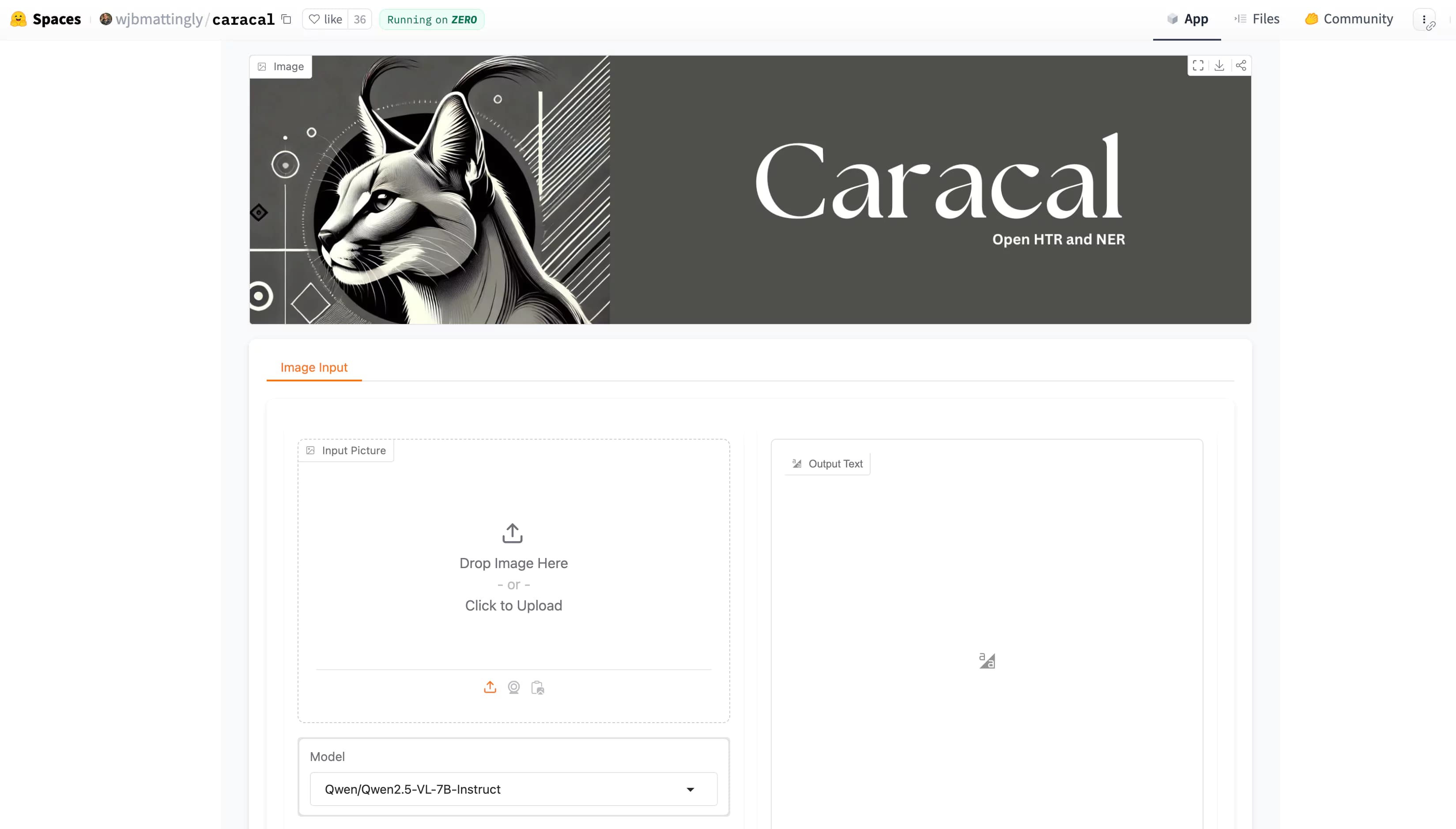

Caracal AI: Free Tool for Handwritten Text Recognition, Extract text from Images

Caracal is a text recognition project that has been widely cloned and fine-tuned by users for specific purposes. The project leverages advanced technology for text recognition tasks, as highlighted in the provided transcript snippet.

Browser-Use Free AI Agent: Now AI Can control your Web Browser

Browser Use is an AI-powered browser automation framework that lets AI agents control your browser to automate web tasks like scraping, form filling, and website interactions.