RepVideo AI: Open-Source Video Generator with Improved Prompt Following

Table of Contents

- What is RepVideo?

- RepVideo Overview:

- Comparing RepVideo and CogVideo

- Example 1: Young Woman Playing a Grand Piano

- Example 2: White Vintage SUV on a Steep Road

- Example 3: Moonlike Object Approaching Earth

- Example 4: Tropical Fish in the Sea

- Example 5: Corgi Vlogging in Tropical Maui

- Measuring Video Consistency

- How RepVideo Achieves Better Consistency

- Installation Guide

- Step 1: Set Up the Environment

- Step 2: Download the Models

- Step 3: Run the Inference

- How RepVideo Improves Spatial Appearance

- Attention Difference Comparison

- Final Thoughts

In this article, I’ll walk you through the details of RepVideo, an enhanced open-source video generator that builds upon an existing model called CogVideo. RepVideo introduces significant improvements in prompt following and video consistency, making it a noteworthy advancement in the field of video generation.

What is RepVideo?

RepVideo is a free, open-source video generator built on CogVideo, an existing open-source model. Its creators modified the architecture to improve prompt adherence and video consistency, so RepVideo produces videos that reflect their input prompts more accurately and stay more consistent from frame to frame.

RepVideo Overview:

| Detail | Description |
|---|---|
| Name | RepVideo - Enhanced Open-Source Video Generator |
| Purpose | AI-powered video generation with improved prompt following and consistency |
| Paper | RepVideo Paper on arXiv |
| GitHub Repository | RepVideo GitHub Code |

Comparing RepVideo and CogVideo

To understand the improvements RepVideo brings, let’s compare it with CogVideo using a few examples.

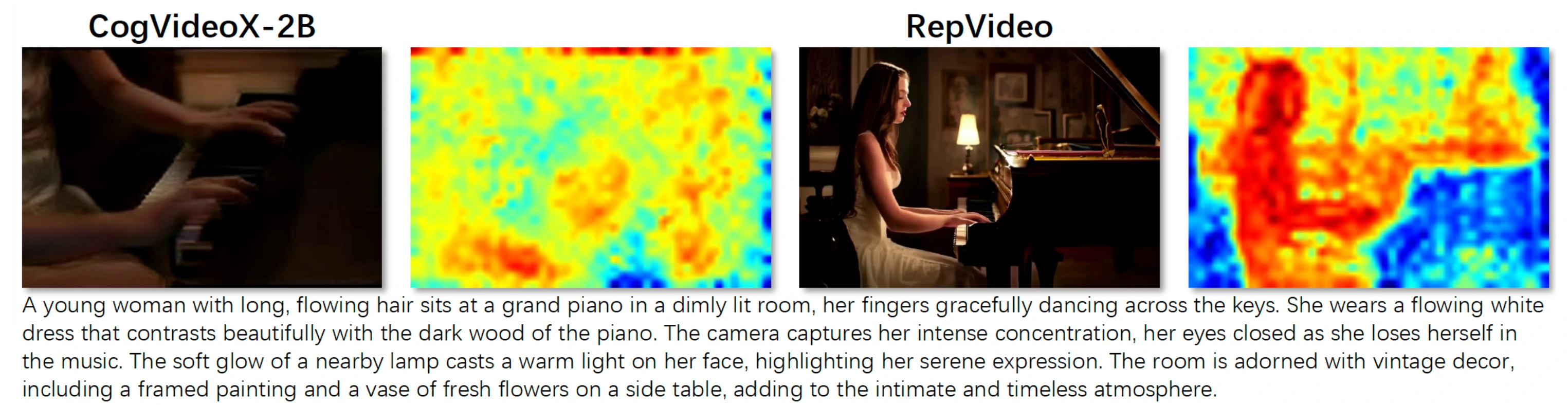

Example 1: Young Woman Playing a Grand Piano

Prompt: "A young woman with long flowing hair sits at a grand piano in a dimly lit room. Her fingers gracefully dance across the keys."

- CogVideo: Fails to generate the woman with long flowing hair sitting at a grand piano.

- RepVideo: Successfully generates the scene as described in the prompt.

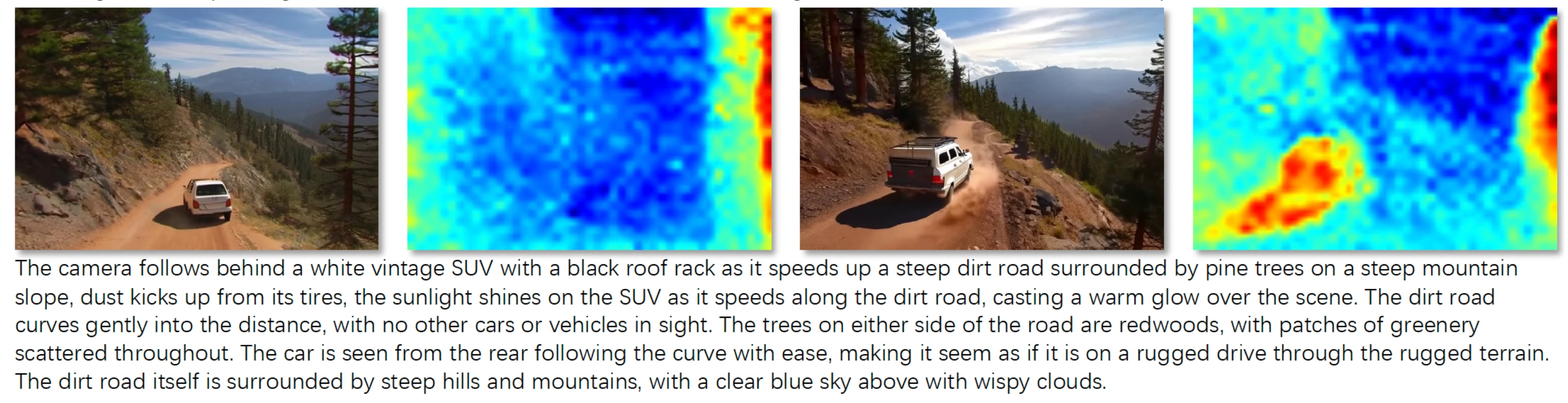

Example 2: White Vintage SUV on a Steep Road

Prompt: "The camera follows behind a white vintage SUV with a black roof as it speeds up a steep dirt road surrounded by pine trees."

- CogVideo: Does not generate a white SUV with a black roof.

- RepVideo: Accurately generates the white SUV with a black roof, and the vehicle is more clearly recognizable as an SUV.

Example 3: Moonlike Object Approaching Earth

Prompt: "The video is a 3D animation of a moonlike object approaching the Earth. The moonlike object is gray with a rough texture."

- CogVideo: Does not generate the Earth or the moonlike object.

- RepVideo: Better understands the prompt and generates the scene as described.

Example 4: Tropical Fish in the Sea

Prompt: "Yellow and black tropical fish dart through the sea."

- CogVideo: Generates some yellow and black tropical fish, but the result lacks detail and realism.

- RepVideo: Produces a more realistic and detailed representation of the fish.

Example 5: Corgi Vlogging in Tropical Maui

Prompt: "A corgi vlogging itself in tropical Maui."

- CogVideo: Fails to generate a corgi; instead, the dog’s face transforms into a distorted, nightmarish creature.

- RepVideo: Successfully generates a corgi, adhering to the prompt.

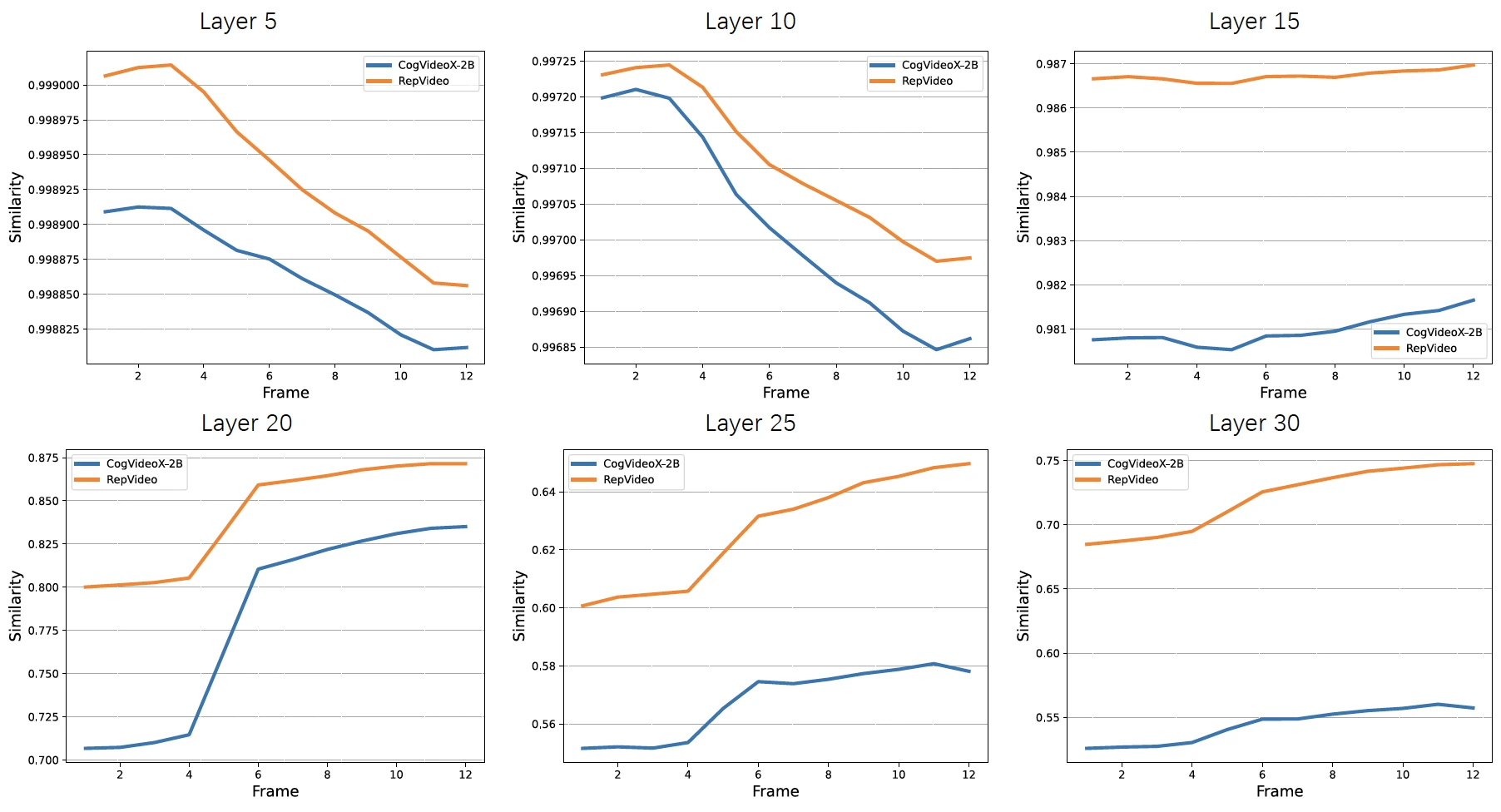

Measuring Video Consistency

To quantify the improvements, the creators of RepVideo used a metric called Y-AIS (similarity between frames), which measures video consistency.

- RepVideo (Orange Line): Consistently shows higher Y-AIS values across all charts.

- CogVideo (Blue Line): Has lower Y-AIS values, indicating less consistency.

These results indicate that RepVideo produces more consistent videos than CogVideo.
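The article does not say exactly how Y-AIS is computed, so the following is only a generic illustration of a frame-similarity score, not the metric the RepVideo authors used. It assumes each frame has already been turned into a feature vector (for example by an image encoder) and averages the cosine similarity between each frame and the next; the function names are hypothetical.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as dissimilar to everything
    return dot / (norm_a * norm_b)


def adjacent_frame_consistency(frames: Sequence[Sequence[float]]) -> float:
    """Mean cosine similarity between each frame and the following frame.

    `frames` holds one (hypothetical) feature vector per video frame.
    Higher values mean the video changes less abruptly between frames.
    """
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    scores = [cosine_similarity(frames[i], frames[i + 1]) for i in range(len(frames) - 1)]
    return sum(scores) / len(scores)
```

A steady sequence such as `[[1.0, 0.0], [1.0, 0.1], [1.0, 0.0]]` scores close to 1, while one that flips between orthogonal vectors, such as `[[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]`, scores 0.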

How RepVideo Achieves Better Consistency

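The key to RepVideo’s improved consistency lies in a technique called cross-layer representation. Rather than relying only on the features produced by a single layer of the model, RepVideo aggregates intermediate representations from neighboring transformer layers and feeds these enriched, more stable features to the attention mechanism. Because the features fluctuate less from one layer to the next, elements such as the background, objects, and movements stay coherent and work together smoothly across frames.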
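As a rough illustration of cross-layer aggregation, the sketch below averages each layer's hidden state with those of the layers just before it, then measures how much the features change from one layer to the next. It is not RepVideo's implementation: the one-vector-per-layer input, the window over preceding layers, and plain averaging are simplifying assumptions, and `aggregate_neighbor_layers` and `layer_to_layer_change` are hypothetical names.

```python
from typing import List, Sequence


def aggregate_neighbor_layers(
    layer_features: Sequence[Sequence[float]], window: int = 3
) -> List[List[float]]:
    """Average each layer's features with those of up to `window - 1` preceding layers.

    `layer_features[i]` is a (hypothetical) hidden-state vector that layer i
    produced for the same token; all vectors share one length.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    aggregated: List[List[float]] = []
    for i in range(len(layer_features)):
        # Current layer plus its predecessors inside the window.
        group = layer_features[max(0, i - window + 1) : i + 1]
        dim = len(group[0])
        aggregated.append([sum(vec[d] for vec in group) / len(group) for d in range(dim)])
    return aggregated


def layer_to_layer_change(features: Sequence[Sequence[float]]) -> float:
    """Mean absolute element-wise change between consecutive layers (lower = more stable)."""
    if len(features) < 2:
        raise ValueError("need features from at least two layers")
    diffs = [
        sum(abs(a - b) for a, b in zip(prev, nxt)) / len(prev)
        for prev, nxt in zip(features, features[1:])
    ]
    return sum(diffs) / len(diffs)
```

With alternating features such as `[[1.0], [3.0], [1.0], [3.0]]`, the layer-to-layer change drops from 2.0 to about 0.33 when `window=2`, which is the kind of stabilization the technique is aiming for.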

By applying this technique to CogVideo, the creators improved its output. However, it’s worth noting that CogVideo isn’t the best open-source video model available: models like HunyuanVideo and Mochi still outperform it in terms of quality.

This raises an interesting question: could the cross-layer representation technique be applied to HunyuanVideo or Mochi to make them even better? Let me know your thoughts in the comments.

Installation Guide

If you’re interested in trying out RepVideo, here’s how you can install and run it locally:

Step 1: Set Up the Environment

- Create a Conda environment:

conda create -n RepVid python==3.10
conda activate RepVid

- From the root of the cloned RepVideo repository, install the required packages:

pip install -r requirements.txt
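After Step 1, a quick sanity check can confirm the interpreter version and report which entries in requirements.txt are not installed. This is a hypothetical helper, not part of the RepVideo repository; it only understands simple `name` or `name<op>version` lines, skips comments and pip options such as `-e`, and looks package names up as written.

```python
import re
import sys
from importlib import metadata
from typing import Iterable, List, Sequence


def python_matches(expected=(3, 10)) -> bool:
    """True if the running interpreter matches the version the conda env was created with."""
    return sys.version_info[:2] == tuple(expected)


def requirement_names(lines: Iterable[str]) -> List[str]:
    """Extract bare package names from simple requirements.txt lines."""
    names = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop inline comments
        if not line or line.startswith("-"):
            continue  # blank line or pip option (-e, -r, --index-url, ...)
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match:
            names.append(match.group(0))
    return names


def missing_packages(names: Sequence[str]) -> List[str]:
    """Names for which no installed distribution metadata is found."""
    missing = []
    for name in names:
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


def check_environment(requirements_path: str = "requirements.txt") -> List[str]:
    """Warn about a Python version mismatch and return uninstalled requirement names."""
    if not python_matches():
        major, minor = sys.version_info[:2]
        print(f"Warning: running Python {major}.{minor}, expected 3.10")
    with open(requirements_path, encoding="utf-8") as fh:
        return missing_packages(requirement_names(fh))
```

Calling `check_environment()` from the repository root inside the activated `RepVid` environment should return an empty list if the installation succeeded.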

Step 2: Download the Models

- Create a directory for the checkpoints:

mkdir ckpt
cd ckpt
mkdir t5-v1_1-xxl

- Download the T5 text encoder and tokenizer files from the CogVideoX-2b repository on Hugging Face:

wget https://huggingface.co/THUDM/CogVideoX-2b/resolve/main/text_encoder/config.json
wget https://huggingface.co/THUDM/CogVideoX-2b/resolve/main/text_encoder/model-00001-of-00002.safetensors
wget https://huggingface.co/THUDM/CogVideoX-2b/resolve/main/text_encoder/model-00002-of-00002.safetensors
wget https://huggingface.co/THUDM/CogVideoX-2b/resolve/main/text_encoder/model.safetensors.index.json
wget https://huggingface.co/THUDM/CogVideoX-2b/resolve/main/tokenizer/added_tokens.json
wget https://huggingface.co/THUDM/CogVideoX-2b/resolve/main/tokenizer/special_tokens_map.json
wget https://huggingface.co/THUDM/CogVideoX-2b/resolve/main/tokenizer/spiece.model
wget https://huggingface.co/THUDM/CogVideoX-2b/resolve/main/tokenizer/tokenizer_config.json

- Set up the VAE (Variational Autoencoder):

cd ../
mkdir vae
wget 'https://cloud.tsinghua.edu.cn/f/fdba7608a49c463ba754/?dl=1'
mv 'index.html?dl=1' vae.zip
unzip vae.zip
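Because the download commands in this step are easy to mistype or interrupt, a small check can list which of the eight text-encoder and tokenizer files are still missing. The URLs are the ones used in this step; the helper names and the directory you choose to check are assumptions. wget saves each file under the last part of its URL in whichever directory you ran it from, so pass that directory.

```python
from pathlib import Path
from typing import List

# Base URL and relative paths taken from the wget commands in Step 2.
HF_BASE = "https://huggingface.co/THUDM/CogVideoX-2b/resolve/main"
TEXT_ENCODER_FILES = [
    "text_encoder/config.json",
    "text_encoder/model-00001-of-00002.safetensors",
    "text_encoder/model-00002-of-00002.safetensors",
    "text_encoder/model.safetensors.index.json",
    "tokenizer/added_tokens.json",
    "tokenizer/special_tokens_map.json",
    "tokenizer/spiece.model",
    "tokenizer/tokenizer_config.json",
]


def download_urls() -> List[str]:
    """Full download URL for every text-encoder and tokenizer file."""
    return [f"{HF_BASE}/{rel}" for rel in TEXT_ENCODER_FILES]


def missing_files(target_dir: Path) -> List[str]:
    """Base file names that are not yet present in target_dir.

    Only the base name (e.g. "config.json") is checked, because wget
    saves each URL under its last path component.
    """
    return [
        Path(rel).name
        for rel in TEXT_ENCODER_FILES
        if not (Path(target_dir) / Path(rel).name).is_file()
    ]
```

Once every download has finished, `missing_files(Path("<download dir>"))` should return an empty list; `download_urls()` can be reused to retry only the files that are still missing.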

Step 3: Run the Inference

- Navigate to the sat directory:

cd sat

- Execute the script:

bash run.sh
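If you prefer to launch inference from Python (for instance from a notebook), the two shell steps above can be wrapped with `subprocess`. This is a hypothetical convenience wrapper: it assumes only what the steps state, namely that `run.sh` lives in the repository's `sat` directory, and it does not know which arguments or configuration `run.sh` reads.

```python
import subprocess
from pathlib import Path


def run_inference(repo_root: str = ".") -> int:
    """Run `bash run.sh` inside <repo_root>/sat and return the script's exit code.

    Hypothetical wrapper: it mirrors `cd sat` followed by `bash run.sh`
    and passes no extra arguments to the script.
    """
    sat_dir = Path(repo_root) / "sat"
    if not (sat_dir / "run.sh").is_file():
        raise FileNotFoundError(f"run.sh not found in {sat_dir}")
    # cwd=sat_dir plays the role of `cd sat`; output streams to the console.
    result = subprocess.run(["bash", "run.sh"], cwd=sat_dir, check=False)
    return result.returncode
```

A non-zero return value means the script itself failed, so its console output is the place to look for the cause.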

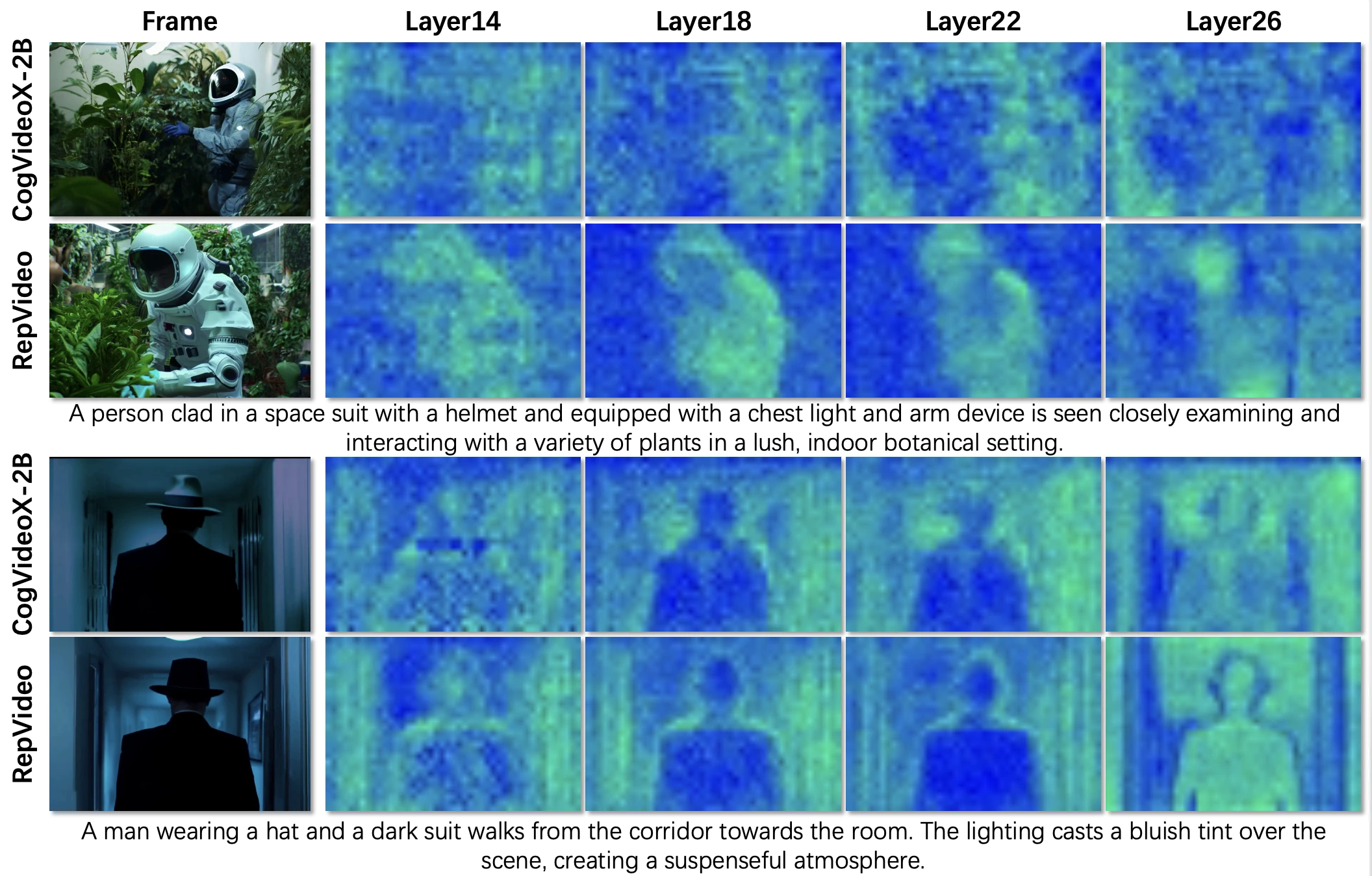

How RepVideo Improves Spatial Appearance

RepVideo’s framework includes a feature cache module and a gating mechanism to aggregate and stabilize intermediate representations. This approach enhances both spatial detail and temporal coherence.
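The gating idea can be illustrated with a sigmoid gate that blends a layer's own features with the cross-layer aggregated ones. This is a simplified sketch, not the RepVideo module: the single scalar gate, the `gated_fusion` name, and passing the gate logit in directly (rather than learning it during training) are assumptions made for clarity.

```python
import math
from typing import List, Sequence


def sigmoid(x: float) -> float:
    """Numerically stable logistic function, mapping any real number into (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def gated_fusion(
    current: Sequence[float], aggregated: Sequence[float], gate_logit: float
) -> List[float]:
    """Blend a layer's own features with cross-layer aggregated features.

    gate = sigmoid(gate_logit): values near 1 favor the aggregated (more
    stable) features, values near 0 keep the layer's own features.
    In a trained model the gate would be learned; here it is a plain input.
    """
    if len(current) != len(aggregated):
        raise ValueError("feature vectors must have the same length")
    g = sigmoid(gate_logit)
    return [g * a + (1.0 - g) * c for c, a in zip(current, aggregated)]
```

For example, `gated_fusion([0.0, 4.0], [2.0, 2.0], 0.0)` returns `[1.0, 3.0]`, because a logit of 0 gives a gate of 0.5 and the result is the midpoint of the two vectors. The gate lets the model decide how much stabilization to apply instead of always replacing a layer's features.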

Attention Difference Comparison

- RepVideo: Attention maps highlight subject boundaries more clearly, reducing inter-layer variability and preserving critical spatial information.

- CogVideo: Struggles to maintain consistent spatial details, leading to fragmented semantics.

This improvement allows RepVideo to generate visually consistent scenes that align more closely with input prompts.
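To make "inter-layer variability" concrete, the sketch below builds scaled dot-product attention maps and averages the absolute difference between the maps of consecutive layers; a lower value means attention shifts less from layer to layer. The query/key inputs and function names are hypothetical, and this is an illustrative measure rather than the comparison procedure used in the RepVideo paper.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def attention_map(queries: Sequence[Sequence[float]], keys: Sequence[Sequence[float]]) -> Matrix:
    """Scaled dot-product attention weights: softmax(q . k / sqrt(d)) for each query row."""
    d = len(queries[0])
    rows: Matrix = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        m = max(scores)  # subtract the max before exponentiating for numerical stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        rows.append([e / total for e in exps])
    return rows


def mean_attention_difference(maps: Sequence[Matrix]) -> float:
    """Average absolute element-wise difference between consecutive layers' attention maps."""
    if len(maps) < 2:
        raise ValueError("need attention maps from at least two layers")
    total = 0.0
    for a, b in zip(maps, maps[1:]):
        cells = [abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b)]
        total += sum(cells) / len(cells)
    return total / (len(maps) - 1)
```

Each row of an attention map sums to 1, so the difference measure stays between 0 (identical maps) and 2, which makes scores from different layers and prompts easy to compare.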

Final Thoughts

RepVideo represents a significant step forward in open-source video generation. By improving prompt following and video consistency, it addresses some of the key limitations of existing models like CogVideo. While it may not yet match the quality of top-tier models like HunyuanVideo or Mochi, its open-source nature and innovative techniques make it a valuable tool for researchers and developers.

If you’re interested in exploring RepVideo further, the code is already available on GitHub. Check out the link provided for detailed instructions on how to get started.
