DiffSplat AI: Fast 3D Model Generation from Text or Images

Table of Contents

- What is DiffSplat?
  - DiffSplat Model Overview
- How DiffSplat Works
  - Input Options: Text or Image
  - The Process Behind DiffSplat
- Examples of DiffSplat in Action
  - Text-to-3D Model Generation
  - Image-to-3D Model Generation
- Advanced Features of DiffSplat
  - Estimating Normal Maps
  - Extracting Depth Maps
  - Extracting Edges
- Getting Started with DiffSplat
- Why DiffSplat Stands Out
- Final Thoughts

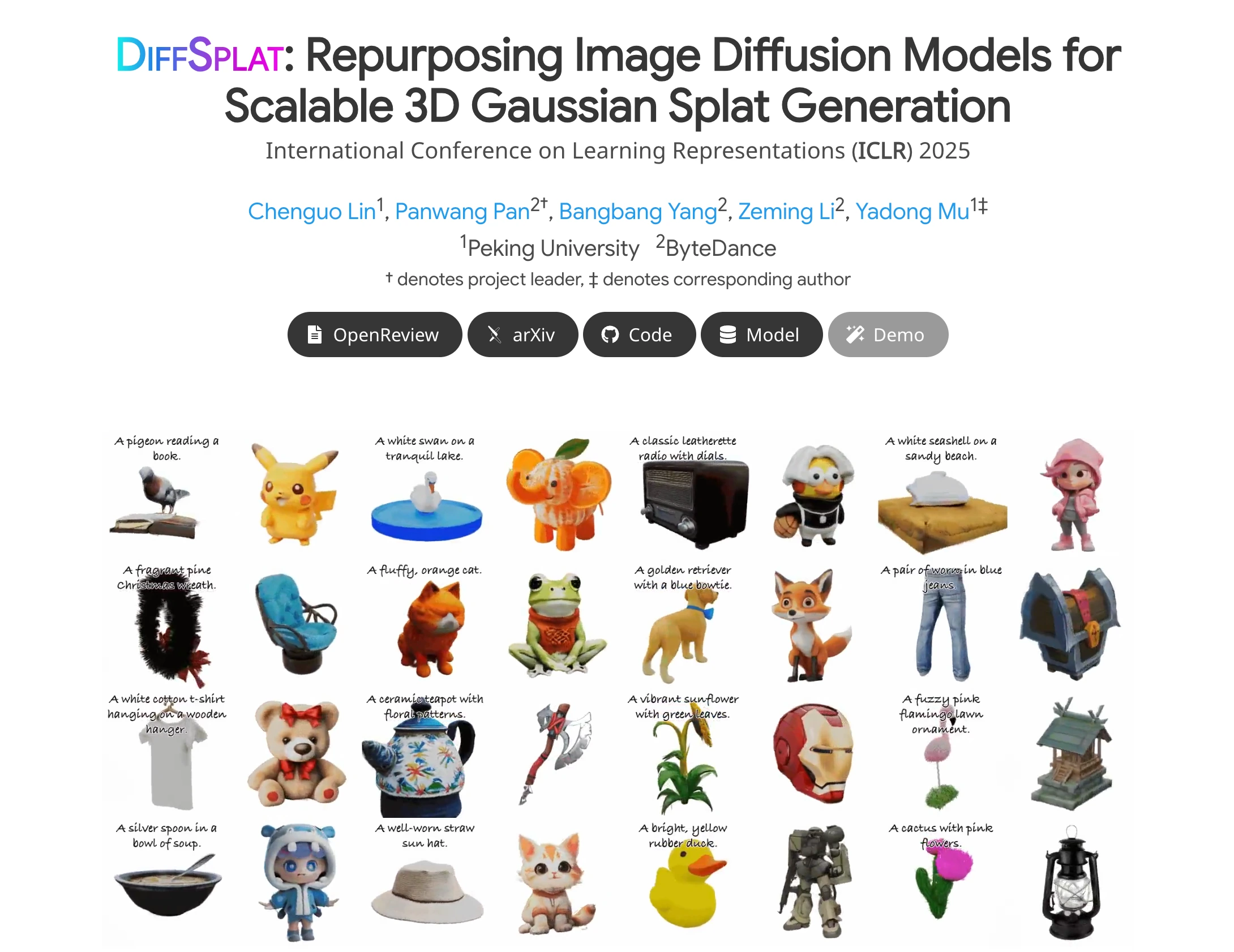

In this article, I’ll walk you through DiffSplat, a tool that generates 3D models from text prompts or images. The models are created in the form of a Gaussian splat, which, in simple terms, is a cloud of tiny, colored, semi-transparent blobs (3D Gaussians) in 3D space.

What’s even more impressive is how fast the process is: generating a model takes only about 1 to 2 seconds.

What is DiffSplat?

DiffSplat creates 3D models from either a text description or an image. The output is a Gaussian splat: a collection of small, colored 3D Gaussians arranged in space. DiffSplat builds on pretrained image diffusion models and generates the splat directly, which keeps generation down to a couple of seconds.
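To make "a cloud of tiny colored points" more concrete, here is a minimal sketch of how a Gaussian splat is commonly stored in 3D Gaussian Splatting work: each Gaussian has a center, a per-axis scale, a rotation quaternion, an opacity, and a color. The class name, field names, and plain RGB color are assumptions for illustration (many implementations store spherical-harmonic color coefficients instead); this is not DiffSplat's actual file format.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class GaussianSplat:
    """N 3D Gaussians in an illustrative layout (not DiffSplat's own format)."""

    positions: np.ndarray  # (N, 3) centers in world space
    scales: np.ndarray     # (N, 3) standard deviation along each local axis
    rotations: np.ndarray  # (N, 4) unit quaternions ordered (w, x, y, z)
    opacities: np.ndarray  # (N,) values in [0, 1]
    colors: np.ndarray     # (N, 3) RGB values in [0, 1]

    def __len__(self) -> int:
        return self.positions.shape[0]

    def covariances(self) -> np.ndarray:
        """Return (N, 3, 3) covariances Sigma = R S S^T R^T."""
        w, x, y, z = self.rotations.T
        # Rotation matrix of each unit quaternion, assembled row by row
        R = np.stack(
            [
                np.stack([1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)], axis=-1),
                np.stack([2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)], axis=-1),
                np.stack([2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)], axis=-1),
            ],
            axis=1,
        )
        # Diagonal scale matrices, broadcast to (N, 3, 3)
        S = np.eye(3)[None, :, :] * self.scales[:, None, :]
        M = R @ S
        return M @ np.transpose(M, (0, 2, 1))


def random_splat(n: int, seed: int = 0) -> GaussianSplat:
    """Build n random Gaussians inside the cube [-1, 1]^3."""
    rng = np.random.default_rng(seed)
    quats = rng.normal(size=(n, 4))
    quats /= np.linalg.norm(quats, axis=1, keepdims=True)  # make rotations valid
    return GaussianSplat(
        positions=rng.uniform(-1.0, 1.0, size=(n, 3)),
        scales=rng.uniform(0.01, 0.05, size=(n, 3)),
        rotations=quats,
        opacities=rng.uniform(0.0, 1.0, size=n),
        colors=rng.uniform(0.0, 1.0, size=(n, 3)),
    )


splat = random_splat(1000)
print(len(splat), splat.covariances().shape)  # 1000 (1000, 3, 3)
```

In this layout each Gaussian carries 3 + 3 + 4 + 1 + 3 = 14 numbers, and the covariance built from scale and rotation is what gives each "point" its size and orientation.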

DiffSplat Model Overview

| Feature | Details |
|---|---|
| Model Name | DiffSplat |
| Functionality | Fast 3D model generation from text or images using Gaussian splats |
| GitHub Repo | DiffSplat GitHub Repo |
| GitHub Pages | DiffSplat GitHub Pages |
| Hugging Face Model | DiffSplat Model on Hugging Face |
| arXiv | arXiv:2501.16764 |

How DiffSplat Works

Input Options: Text or Image

DiffSplat accepts two types of inputs:

- Text Descriptions: You can describe the 3D model you want, and DiffSplat will generate it for you.

- Images: You can upload an image, and the tool will create a 3D model based on it.

The Process Behind DiffSplat

Here’s a step-by-step breakdown of how DiffSplat generates 3D models:

1. Input Processing: Whether you provide a text description or an image, DiffSplat feeds it into a diffusion model that works much like image generators such as Stable Diffusion or Flux.

2. Image Generation: The diffusion model generates an image-like output conditioned on your input. Instead of an ordinary picture, this output describes 3D Gaussians laid out on image grids. DiffSplat is also flexible about the underlying generator: you can build on SDXL, PixArt, or Stable Diffusion 3, depending on your preference.

3. Latent Decoder: The diffusion model works in a compressed latent space, so a latent decoder converts its output into the Gaussian splat parameters that make up the 3D model.

4. 3D Rendering Loss Component: To keep the model consistent from different angles, DiffSplat is trained with a 3D rendering loss in addition to the usual diffusion loss. The generated splats are rendered from several viewpoints and compared with reference views, which encourages the model to look coherent however you rotate it.
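The idea of pairing a diffusion loss with a 3D rendering loss can be sketched as a weighted sum. This is a toy NumPy illustration under stated assumptions: both terms are plain mean-squared errors, the model is assumed to predict noise, the rendered and reference views are given as ready-made arrays, and `render_weight` is a hypothetical hyperparameter. It is not DiffSplat's training code; a real setup would render the Gaussians with a differentiable rasterizer so gradients can flow back to the model.

```python
import numpy as np


def mse(a: np.ndarray, b: np.ndarray) -> float:
    """Mean squared error between two arrays of the same shape."""
    return float(np.mean((a - b) ** 2))


def combined_loss(
    predicted_noise: np.ndarray,
    true_noise: np.ndarray,
    rendered_views: np.ndarray,
    target_views: np.ndarray,
    render_weight: float = 1.0,  # hypothetical weighting hyperparameter
) -> float:
    """Diffusion loss plus a weighted 3D rendering loss (toy version).

    rendered_views / target_views: (V, H, W, 3) images of the generated object
    and of the reference object, taken from the same V camera viewpoints.
    """
    diffusion_loss = mse(predicted_noise, true_noise)
    # Disagreement in any view raises the loss, so an object that looks right
    # from the front but wrong from the side is still penalized.
    rendering_loss = mse(rendered_views, target_views)
    return diffusion_loss + render_weight * rendering_loss


rng = np.random.default_rng(0)
noise = rng.normal(size=(4, 32, 32))
views = rng.uniform(size=(4, 64, 64, 3))
print(combined_loss(noise, noise, views, views))  # 0.0
```

Averaging over every view at once is the simplest choice; a per-view weighting would be an equally plausible variant.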

Examples of DiffSplat in Action

Text-to-3D Model Generation

DiffSplat can turn text descriptions into 3D models. For instance, if you describe a “steampunk robot,” the tool generates a 3D model that matches your description. The results are often impressive, even for complex, detailed characters.

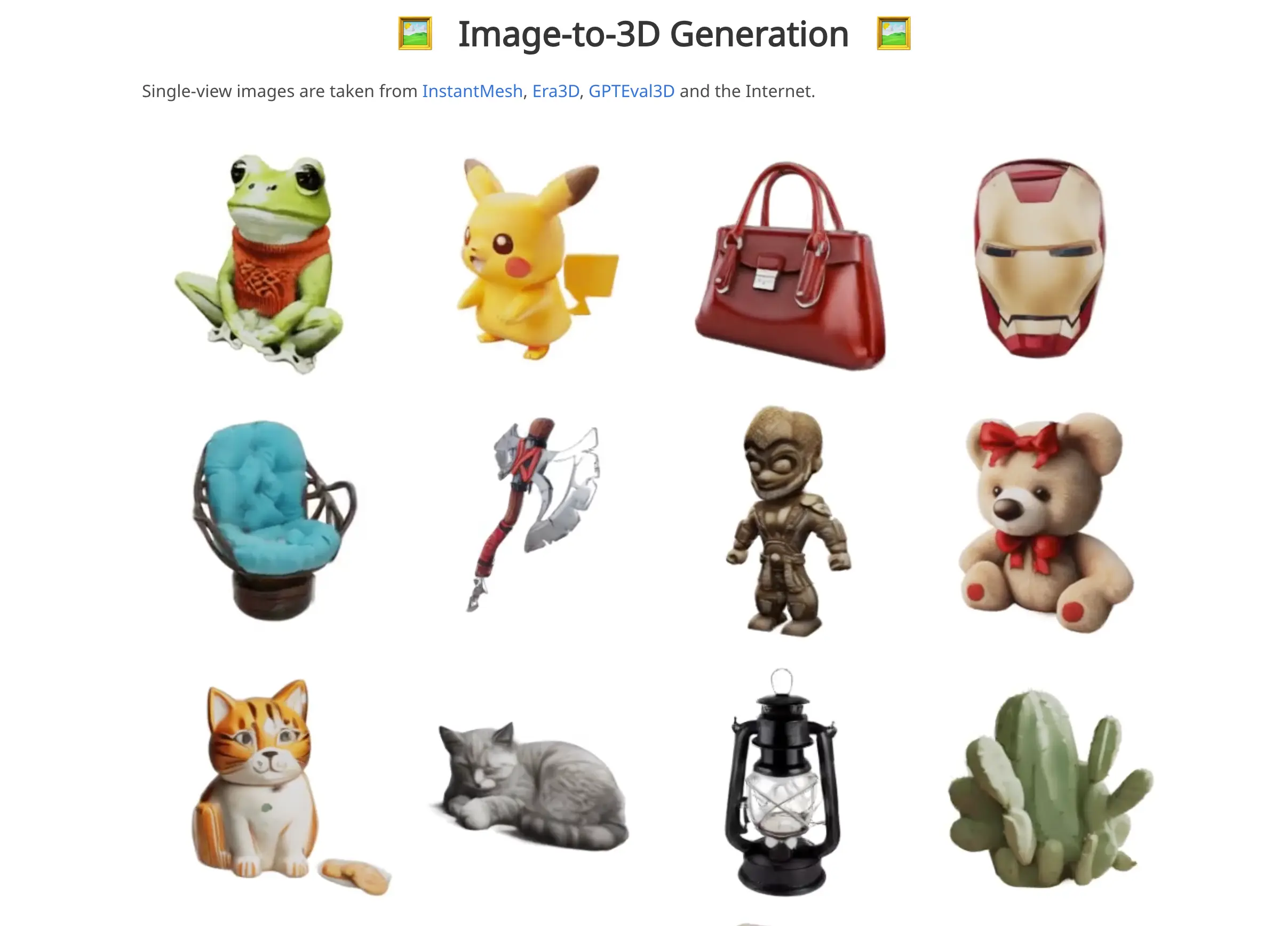

Image-to-3D Model Generation

You can also upload an image, and DiffSplat will create a 3D model from it. Here are some key observations:

- Handling Complex Characters: Even when the uploaded character is highly detailed and intricate, DiffSplat processes it effectively.

- Estimating the Back of the Character: The input image does not show the back of the character, so the tool infers a plausible back side, producing a complete and consistent 3D model.

Advanced Features of DiffSplat

DiffSplat is not limited to generating 3D models from text or images. It also offers several advanced features that make it a versatile tool for 3D modeling.

Estimating Normal Maps

A normal map records, for each pixel, the direction the object’s surface is facing, which captures its shape and fine surface detail. DiffSplat can take an original object and generate its normal map. Once you have this map, you can combine it with a new text description (e.g., “steampunk robot”) to create a completely new object that follows the original surface details.
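One common way to obtain a normal map, not necessarily the one DiffSplat uses, is to derive it from a depth map: the slope of the surface along x and y tells you which way it faces. The sketch below assumes an orthographic view with unit pixel spacing and a coordinate convention of x to the right, y down the image rows, and z pointing toward the camera, so a flat surface facing the camera gets the normal (0, 0, 1). The function names are hypothetical.

```python
import numpy as np


def normals_from_depth(depth: np.ndarray) -> np.ndarray:
    """Estimate (H, W, 3) unit normals from an (H, W) depth map.

    Assumes an orthographic camera with unit pixel spacing; x points right,
    y points down the rows, z points toward the camera.
    """
    # Finite-difference slopes of depth along rows (y) and columns (x)
    dd_dy, dd_dx = np.gradient(depth.astype(float))
    # With z = -depth, the surface normal is proportional to (dd/dx, dd/dy, 1)
    normals = np.stack([dd_dx, dd_dy, np.ones_like(dd_dx)], axis=-1)
    normals /= np.linalg.norm(normals, axis=-1, keepdims=True)
    return normals


def normals_to_rgb(normals: np.ndarray) -> np.ndarray:
    """Map components from [-1, 1] to 8-bit colors, the usual normal-map encoding."""
    return ((normals + 1.0) * 0.5 * 255).round().astype(np.uint8)


flat = np.full((4, 4), 2.0)
print(normals_to_rgb(normals_from_depth(flat))[0, 0])  # [128 128 255]
```

The flat-surface color (128, 128, 255) is why typical normal maps look mostly light blue-violet.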

Extracting Depth Maps

Another powerful feature is the ability to extract a depth map from an object. A depth map stores, for each pixel, the distance from the viewpoint to the object’s surface. You can use this depth map, along with a new text description, to generate fresh 3D models that align with the original object’s depth information.
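To show what a depth map holds, here is a minimal sketch that renders one from a set of 3D points, such as the centers of a Gaussian splat, using a pinhole camera and a z-buffer that keeps the nearest point per pixel. The function name, the camera model, the parameters (`focal`, `height`, `width`), and the treatment of each Gaussian as a single point are simplifying assumptions; a full splat renderer blends each Gaussian’s footprint instead.

```python
import numpy as np


def render_depth(points: np.ndarray, focal: float, height: int, width: int) -> np.ndarray:
    """Project (N, 3) camera-space points into an (H, W) depth map.

    The camera looks along +z; pixels that no point lands on stay at +inf.
    """
    depth = np.full((height, width), np.inf)
    in_front = points[:, 2] > 0  # ignore points behind the camera
    x, y, z = points[in_front].T
    # Pinhole projection with the principal point at the image center
    u = np.floor(focal * x / z + width / 2).astype(int)
    v = np.floor(focal * y / z + height / 2).astype(int)
    visible = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    # z-buffer: np.minimum.at keeps the closest depth when points share a pixel
    np.minimum.at(depth, (v[visible], u[visible]), z[visible])
    return depth


pts = np.array([
    [0.0, 0.0, 2.0],   # lands on the center pixel
    [0.0, 0.0, 5.0],   # same pixel, but farther away, so it is hidden
    [0.0, 0.0, -1.0],  # behind the camera
    [1.0, 0.0, 1.0],   # projects outside the 8x8 image
])
depth = render_depth(pts, focal=10.0, height=8, width=8)
print(depth[4, 4])  # 2.0
```

Using `np.minimum.at` rather than plain fancy-index assignment matters here: with assignment, whichever duplicate index comes last would win, instead of the nearest surface.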

Extracting Edges

DiffSplat can also extract the edges (outlines and sharp contours) of an original object. By combining these edges with different text prompts, you can create new 3D models that align with the extracted edges. This feature opens up a wide range of creative possibilities.
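As a rough illustration of edge extraction, the sketch below marks pixels where image brightness changes sharply, using Sobel filters written in plain NumPy. Edge maps for this kind of conditioning are often produced with the Canny detector instead; the Sobel operator, the function name `sobel_edges`, and the `threshold` value are simplifying assumptions, not necessarily what DiffSplat uses.

```python
import numpy as np


def sobel_edges(gray: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Return a boolean edge mask for an (H, W) grayscale image in [0, 1]."""
    kx = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)  # horizontal change
    ky = kx.T                                                         # vertical change
    padded = np.pad(gray.astype(float), 1, mode="edge")  # repeat border pixels
    h, w = gray.shape
    gx = np.zeros((h, w))
    gy = np.zeros((h, w))
    # Correlate with each 3x3 kernel by summing weighted, shifted copies of the image
    for i in range(3):
        for j in range(3):
            window = padded[i:i + h, j:j + w]
            gx += kx[i, j] * window
            gy += ky[i, j] * window
    magnitude = np.hypot(gx, gy)  # gradient strength at each pixel
    return magnitude > threshold


img = np.zeros((5, 6))
img[:, 3:] = 1.0  # dark left half, bright right half
print(sobel_edges(img).astype(int)[0])  # [0 0 1 1 0 0]
```

Only the two columns on either side of the brightness step are marked, which is the outline a conditioning model would then follow.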

Getting Started with DiffSplat

If you’re excited to try out DiffSplat, the good news is that it’s already available on GitHub. The repository includes all the instructions you need to install and run the tool locally.

Here’s what you’ll find in the GitHub repo:

- Installation Guide: Step-by-step instructions to set up DiffSplat on your system.

- Usage Instructions: Detailed explanations of how to use the tool for text-to-3D and image-to-3D generation.

- Customization Options: Information on how to integrate different image generators like SDXL, PixArt, or Stable Diffusion 3.

Why DiffSplat Stands Out

Here’s what makes DiffSplat a powerful and versatile tool for 3D modeling:

- Speed: It generates 3D models in just 1 to 2 seconds.

- Flexibility: You can use text descriptions or images as inputs, and even switch between different image generators.

- Coherence: The 3D rendering loss used during training helps keep the models consistent from all angles.

- Advanced Features: From normal maps to depth maps and edge extraction, DiffSplat offers a range of tools to enhance your 3D modeling experience.

Final Thoughts

DiffSplat is an incredible tool for anyone interested in 3D modeling: it generates models from text or images in about 1 to 2 seconds, and you can customize the process by switching between different image generators.
