DeepSeek V3 AI Model: How to Use It to Build Projects

Table of Contents

- What is DeepSeek V3?

- Why DeepSeek V3 Is Unique

- Performance and Cost Comparison

- Model Architecture: Mixture of Experts (MoE)

- Benchmarks That Matter

- Technical Details of DeepSeek V3

- Key Highlights

- Training Innovations

- Performance Benchmarks and Comparisons

- Context Window and Usability

- Ethical and Practical Considerations

- How to Use DeepSeek V3?

- Step 1: Set Up a New Project

- Step 2: Log Into the DeepSeek Platform

- Step 3: Access Documentation and Copy API Code

- Step 4: Insert Your API Key

- Setting Up Payment and API Access

- Testing DeepSeek V3: Coding Capabilities

- Test 1: HTML Web Page with Interactivity

- Test 2: Text-to-Image Web Application

- Insights from DeepSeek’s Technical Report

- Final Thoughts

DeepSeek V3 represents a significant milestone in AI history: it is the first open-weight AI model to match or outperform leading closed-source counterparts on many benchmarks. In this guide, I'll walk you through everything you need to know about DeepSeek V3, including its standout features, API, Hugging Face release, benchmarks, and how to start building projects with it.

What is DeepSeek V3?

DeepSeek V3 stands out as the first open-weight model that matches the performance of proprietary closed-source models. It excels not only in benchmark tests but also in independent external evaluations. From my own hands-on experience, it feels significantly different from other models currently available.

What’s truly remarkable is that this model was trained at a fraction of the cost that proprietary model providers typically incur. This article is divided into two main sections:

- Technical Details of DeepSeek V3

- My Testing Results: The Good and the Bad

Why DeepSeek V3 Is Unique

DeepSeek V3 has outperformed both GPT-4o and Claude 3.5 Sonnet on various benchmarks. The most striking aspect is its affordability: it offers comparable or superior performance at a fraction of the cost.

Performance and Cost Comparison

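- Cost: As low as $0.014 per 1 million input tokens (the cache-hit rate during DeepSeek's launch promotion; cache misses and output tokens cost more).

- Benchmarks: Matches or exceeds leading models like GPT-4o while remaining significantly cheaper.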
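Turning a per-million-token rate into a budget is simple arithmetic: multiply the token count by the rate and divide by one million. The sketch below defaults to the $0.014 figure quoted above; real bills depend on DeepSeek's current price list, which charges input and output tokens differently, so treat the result as a rough estimate.

```python
def estimate_cost_usd(tokens: int, price_per_million_usd: float = 0.014) -> float:
    """Return the approximate USD cost of processing `tokens` tokens.

    The default rate is the $0.014 per 1M tokens figure quoted in this article;
    pass a different rate for output tokens, cache misses, or later price changes.
    """
    if tokens < 0:
        raise ValueError("token count must be non-negative")
    return tokens * price_per_million_usd / 1_000_000


if __name__ == "__main__":
    # 50 million tokens at $0.014 per million tokens
    print(f"${estimate_cost_usd(50_000_000):.2f}")  # prints $0.70
```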

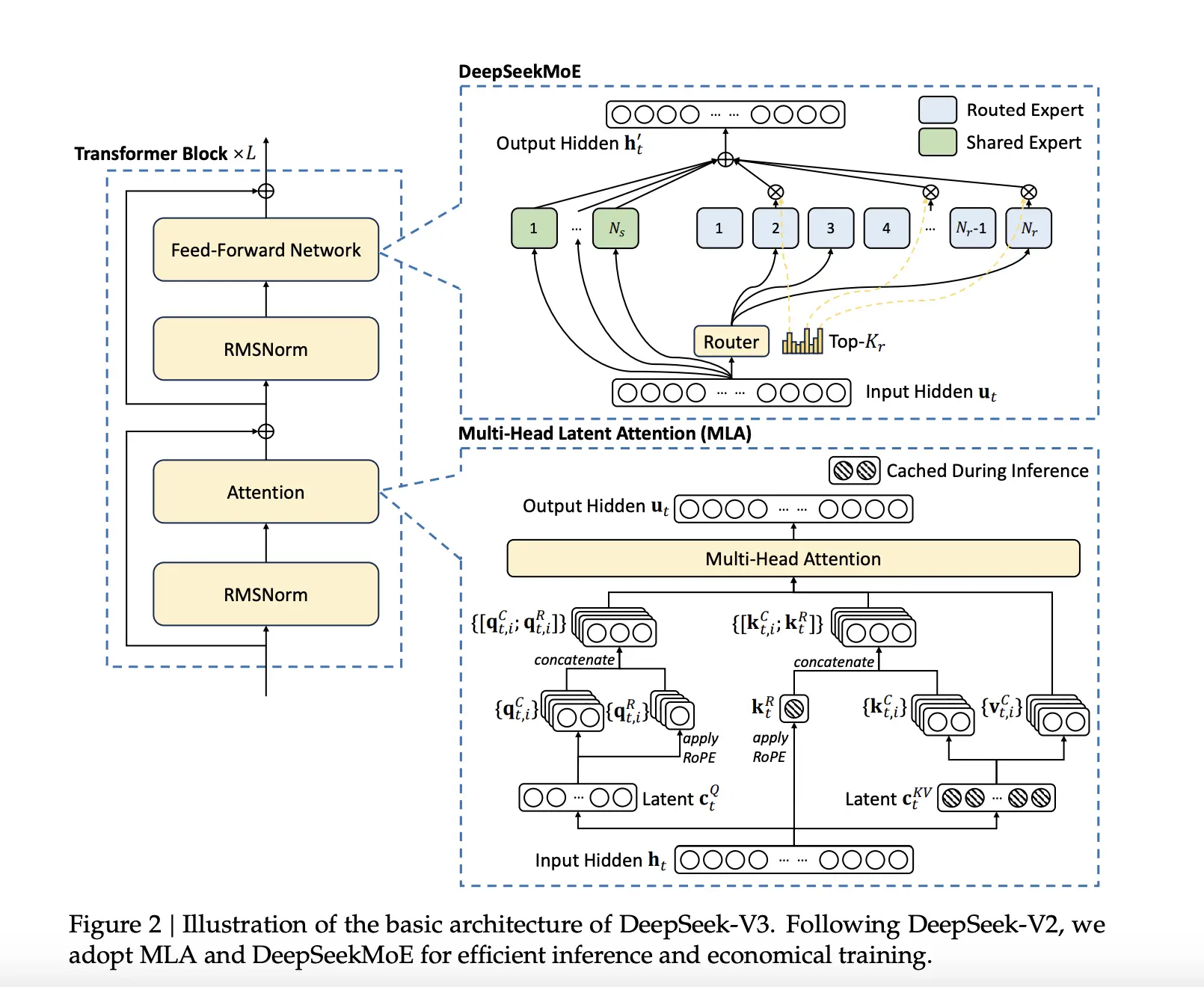

Model Architecture: Mixture of Experts (MoE)

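DeepSeek V3 employs a "Mixture of Experts" (MoE) architecture. Instead of running the whole network for every token, each MoE layer contains many smaller "expert" sub-networks, and a learned router sends each token to only a few of them (in DeepSeek V3, 8 of 256 routed experts, plus one shared expert that every token uses). Experts tend to specialize in particular kinds of input, though not along neat human-defined lines such as mathematics, chemistry, or coding.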
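The routing step can be sketched in a few lines. This toy version scores every expert for one token, keeps the top k, and normalizes their gate weights so the selected experts' outputs can be mixed. Like DeepSeek V3, it turns router logits into sigmoid affinity scores; unlike it, there are only four experts, they are plain Python functions rather than feed-forward networks, and the router logits are made-up numbers. Treat it as an illustration of the idea, not DeepSeek's implementation.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[float], float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def route_top_k(scores: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-affinity experts and normalize their gate weights to sum to 1."""
    affinities = [sigmoid(s) for s in scores]
    top = sorted(range(len(affinities)), key=lambda i: affinities[i], reverse=True)[:k]
    total = sum(affinities[i] for i in top)
    return [(i, affinities[i] / total) for i in top]


def moe_forward(x: float, router_scores: Sequence[float], experts: Sequence[Expert], k: int = 2) -> float:
    """Mix the outputs of the selected experts only; unselected experts are never evaluated."""
    return sum(weight * experts[i](x) for i, weight in route_top_k(router_scores, k))


if __name__ == "__main__":
    # Four toy "experts"; a real MoE layer uses feed-forward networks instead.
    experts = [lambda v: v + 1, lambda v: 2 * v, lambda v: v * v, lambda v: -v]
    scores = [0.1, 2.0, -1.0, 1.5]  # hypothetical router logits for one token
    print(route_top_k(scores, k=2))
    print(moe_forward(3.0, scores, experts, k=2))
```

Only two of the four experts run for this token, which is the whole point: compute per token scales with k, not with the total number of experts.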

Benchmarks That Matter

Here are six benchmarks where DeepSeek V3 stands out:

- MMLU-Pro Benchmark: Competitive with GPT-4o and Claude 3.5 Sonnet.

- GPQA Diamond Benchmark: Handles PhD-level science questions, with only Claude 3.5 Sonnet outperforming it.

- MATH-500 Benchmark: Tops the chart, outperforming all competitors, including Claude 3.5 Sonnet.

- AIME 2024 Benchmark: Leads the field on these competition-level math problems.

- Codeforces Benchmark: Dominates in competitive programming tasks.

- SWE-bench Verified Benchmark: Excels at real-world software engineering tasks, second only to Claude 3.5 Sonnet.

Additionally, on the "Needle in a Haystack" test, DeepSeek V3 achieves a perfect 10/10 score, retrieving the hidden fact accurately across context lengths up to 128,000 tokens.
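A needle-in-a-haystack check hides one fact (the needle) at a chosen depth inside a long stretch of filler text and asks the model to retrieve it. The sketch below builds such a prompt and scores a reply by simple substring matching. The filler sentence, the needle, and the pass/fail scoring are my own assumptions for illustration, not the exact protocol DeepSeek used.

```python
def build_haystack_prompt(needle: str, question: str, filler: str, n_filler: int, depth: float) -> str:
    """Insert `needle` at relative position `depth` (0.0 = start, 1.0 = end) among filler sentences."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    sentences = [filler] * n_filler
    sentences.insert(round(depth * n_filler), needle)
    return " ".join(sentences) + f"\n\nQuestion: {question}"


def passed(answer: str, expected: str) -> bool:
    """Crude pass/fail: the expected fact must appear somewhere in the model's answer."""
    return expected.lower() in answer.lower()


if __name__ == "__main__":
    prompt = build_haystack_prompt(
        needle="The secret code is 4172.",
        question="What is the secret code?",
        filler="The sky was clear over the quiet town.",
        n_filler=1000,
        depth=0.5,
    )
    print(len(prompt.split()), "words")
    # Send `prompt` to the model, then score its reply with passed(reply, "4172").
```

Sweeping `depth` and `n_filler` over a grid gives the familiar heatmap of retrieval accuracy by position and context length.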

Technical Details of DeepSeek V3

Key Highlights

Unprecedented Open-Weight Performance

DeepSeek V3 has proven itself as the best open-weight model currently available, rivaling even proprietary models like Claude 3.5 Sonnet and GPT-4o in benchmarks. It surpasses GPT-4o on several metrics and holds its own against some of the best in the industry.

Massive Model Size

With 671 billion total parameters, DeepSeek V3 is a colossal model. Only 37 billion of these are activated for each token, thanks to its Mixture of Experts design.
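The gap between total and active parameters is what keeps inference affordable. A quick calculation with the 671 billion total and 37 billion active figures from DeepSeek's report shows how small the active share is:

```python
TOTAL_PARAMS = 671e9   # total parameters reported for DeepSeek V3
ACTIVE_PARAMS = 37e9   # parameters activated for each token

active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS
print(f"{active_fraction:.1%} of the weights are used per token")  # prints 5.5% of the weights ...
```

Per-token compute scales roughly with the active parameters, but all 671 billion still have to be stored in memory, which is why local deployment remains demanding.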

Training Tokens and Cost Efficiency

The model was trained on 14.8 trillion high-quality tokens, with a total training cost of approximately $5.6 million, a fraction of the typical expenditure for training models of similar size. This figure covers only the final training run, not earlier research and ablation experiments.
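The $5.6 million figure is simple arithmetic from DeepSeek's technical report: about 2.788 million H800 GPU-hours, split across pre-training, context extension, and post-training, multiplied by an assumed rental price of $2 per GPU-hour. The sketch reproduces that calculation; the $2 rate is the report's assumption, not an actual invoice.

```python
# GPU-hour figures (in thousands) from the DeepSeek V3 technical report
gpu_hours_k = {
    "pre-training": 2664,
    "context extension": 119,
    "post-training": 5,
}
PRICE_PER_GPU_HOUR_USD = 2.0  # rental price assumed in the report

total_gpu_hours = sum(gpu_hours_k.values()) * 1_000
total_cost_usd = total_gpu_hours * PRICE_PER_GPU_HOUR_USD
print(f"{total_gpu_hours:,} GPU-hours -> ${total_cost_usd / 1e6:.3f}M")  # 2,788,000 GPU-hours -> $5.576M
```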

Training Innovations

Hardware Used

The training was conducted on a cluster of 2,048 NVIDIA H800 GPUs. These chips have less interconnect bandwidth than the more advanced H100, which the team could not access, yet the cluster still delivered stellar results.

Precision and Load Balancing

- Training employed an FP8 mixed-precision strategy, showcasing the feasibility of 8-bit floating-point precision for such a large model.

- An auxiliary-loss-free load-balancing strategy keeps tokens spread evenly across experts without the accuracy cost of a large balancing loss, and further advances in the training algorithms helped overcome computational constraints.
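The auxiliary-loss-free idea can be illustrated with a toy update rule: each expert carries a bias that is added to its routing score only when choosing the top-k experts, and after each step the bias is lowered for overloaded experts and raised for underloaded ones. The sketch follows that description from DeepSeek's report, but the step size, expert count, and scores are made-up values, and real training updates these biases over whole batches on the GPU.

```python
from typing import List, Sequence


def select_experts(scores: Sequence[float], biases: Sequence[float], k: int) -> List[int]:
    """Choose the top-k experts by score + bias; the bias affects selection only, not gate weights."""
    adjusted = [s + b for s, b in zip(scores, biases)]
    return sorted(range(len(scores)), key=lambda i: adjusted[i], reverse=True)[:k]


def update_biases(biases: Sequence[float], loads: Sequence[int], gamma: float) -> List[float]:
    """Lower the bias of overloaded experts and raise it for underloaded ones by a fixed step."""
    mean_load = sum(loads) / len(loads)
    new = []
    for b, load in zip(biases, loads):
        if load > mean_load:
            new.append(b - gamma)
        elif load < mean_load:
            new.append(b + gamma)
        else:
            new.append(b)
    return new


if __name__ == "__main__":
    n_experts, k, gamma = 4, 1, 0.1
    biases = [0.0] * n_experts
    # Every token prefers expert 0, so without balancing it would receive all the load.
    token_scores = [[0.9, 0.5, 0.4, 0.3]] * 8
    for step in range(5):
        loads = [0] * n_experts
        for scores in token_scores:
            for i in select_experts(scores, biases, k):
                loads[i] += 1
        print(step, loads, [round(b, 2) for b in biases])
        biases = update_biases(biases, loads, gamma)
```

Because the bias never enters the gate weights, balancing changes which experts are chosen without distorting how their outputs are mixed.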


Multi-Token Prediction

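A multi-token prediction (MTP) objective has the model predict an additional future token at each position, which densifies the training signal and may improve data efficiency. The MTP module can also be repurposed for speculative decoding to speed up inference.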
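The heart of multi-token prediction is how the training targets are laid out: at each position the model is asked for the next token and, through extra prediction modules, for tokens further ahead. This sketch only builds those targets for a prediction depth D; DeepSeek V3 uses D = 1 (one extra token), and the MTP modules themselves, their shared embeddings, and the loss weighting from the report are left out.

```python
from typing import List, Sequence


def mtp_targets(tokens: Sequence[int], depth: int) -> List[List[int]]:
    """For each position i, return [tokens[i+1], ..., tokens[i+1+depth]].

    The first entry is the ordinary next-token target; the remaining `depth`
    entries are the extra tokens the MTP modules learn to predict. Positions
    too close to the end to have every target are skipped.
    """
    n_ahead = depth + 1
    return [list(tokens[i + 1 : i + 1 + n_ahead]) for i in range(len(tokens) - n_ahead)]


if __name__ == "__main__":
    # depth=1 mirrors DeepSeek V3's setting of one extra predicted token
    print(mtp_targets([10, 11, 12, 13, 14], depth=1))  # [[11, 12], [12, 13], [13, 14]]
```

With `depth=0` this reduces to ordinary next-token targets, which makes the extra signal from MTP easy to see side by side.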

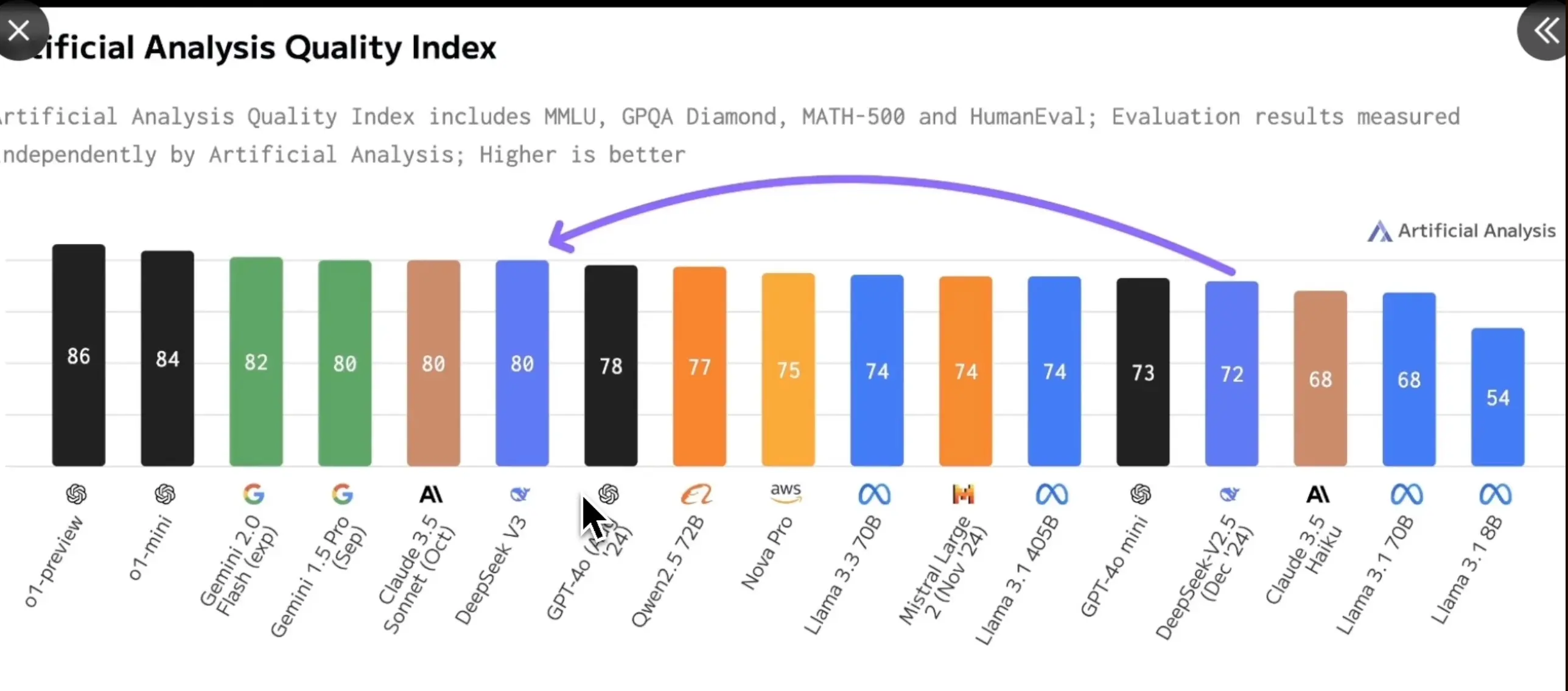

Performance Benchmarks and Comparisons


Against Proprietary Models

- Performs on par with Claude 3.5 Sonnet.

- Outperforms GPT-4o on numerous benchmarks.

- Closely matches Gemini 1.5 Flash in terms of quality versus cost.


Independent Testing Results

Independent groups, such as Artificial Analysis and the creator of Aider (an AI coding assistant), have validated its capabilities. Notably, it performs better than Claude 3.5 Sonnet and the experimental Gemini model in reasoning tasks.

Context Window and Usability

128,000-Token Context Window

DeepSeek V3 supports an extensive context window of up to 128,000 tokens, making it suitable for complex applications.

API and Deployment Options

The model can be accessed via APIs provided by the official DeepSeek platform or other hosting providers. For those with sufficient computational resources, it can also be run locally.

Ethical and Practical Considerations

While DeepSeek V3's performance is groundbreaking, there are critical considerations:

- Data Oversight: As a Chinese company, DeepSeek is subject to government regulations, which may allow authorities to access data stored on its platform.

- Usage Restrictions: Although the model weights are openly available, the model's size (671 billion parameters) makes local deployment impractical without powerful hardware. Third-party hosting is still limited, so most users currently rely on the DeepSeek platform.

How to Use DeepSeek V3?

Step 1: Set Up a New Project

- Open your preferred code editor (e.g., VS Code, Cursor).

- Create a new folder and, inside it, a file named main.py.
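If you prefer to script this step, the sketch below creates the project folder, an empty main.py, and a requirements.txt listing the openai package (the SDK used in Step 3). The folder name deepseek-project and the helper name are placeholders of my own.

```python
from pathlib import Path


def scaffold_project(root: str = "deepseek-project") -> Path:
    """Create the project folder with main.py and requirements.txt, leaving existing files untouched."""
    project = Path(root)
    project.mkdir(parents=True, exist_ok=True)
    (project / "main.py").touch(exist_ok=True)
    requirements = project / "requirements.txt"
    if not requirements.exists():
        requirements.write_text("openai\n")
    return project


# Usage: scaffold_project(), then run `pip install -r requirements.txt` inside the new folder.
```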

Step 2: Log Into the DeepSeek Platform

- Visit the DeepSeek platform's login page.

- Tip: Avoid using your primary email and password for added security.

- Once logged in, navigate to the dashboard, where you’ll see information about the model’s discounted rates.

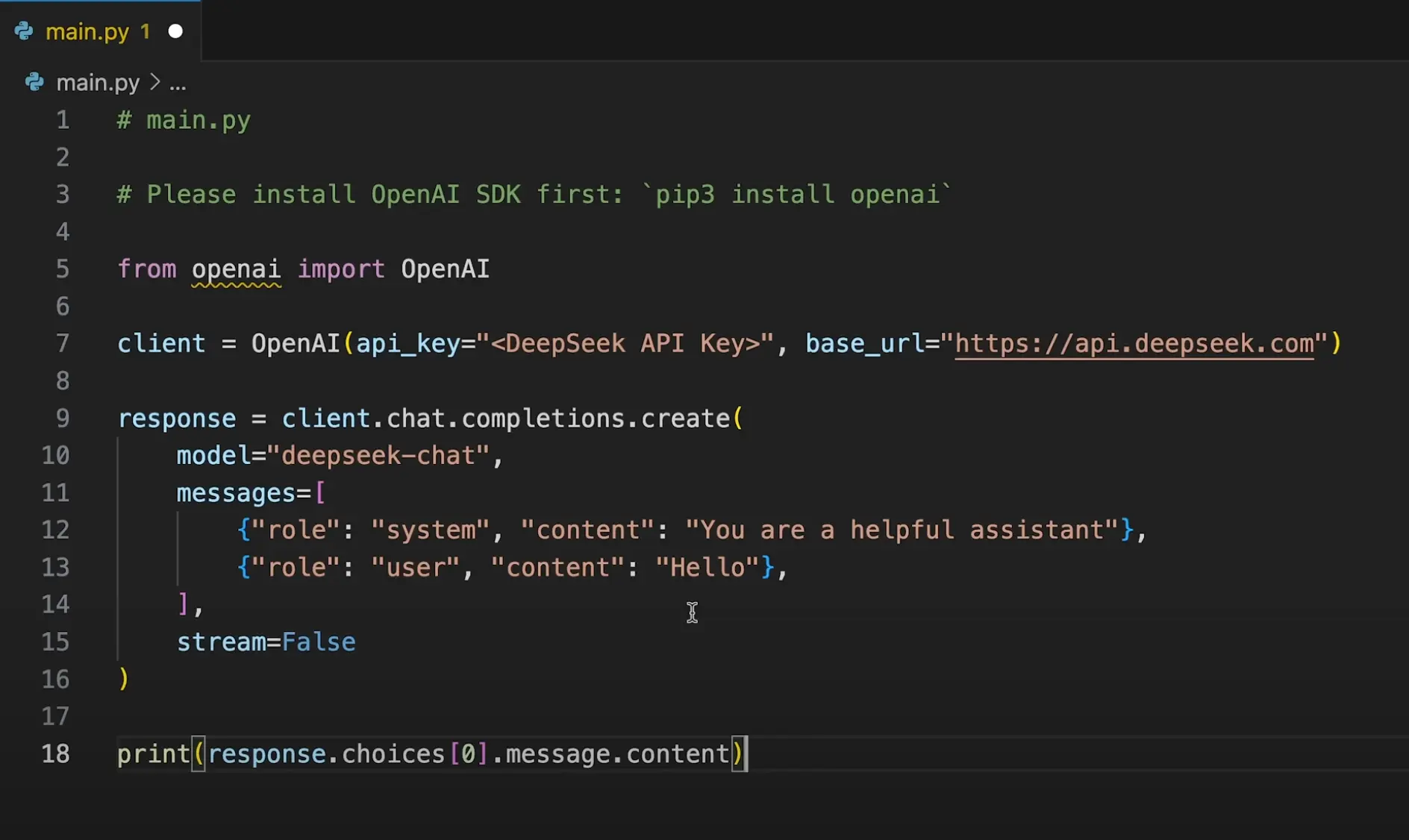

Step 3: Access Documentation and Copy API Code

- Visit the DeepSeek documentation and locate the “Your First API Call” section.

- Copy the Python code provided in the documentation.

- The API is compatible with OpenAI’s SDK, so the code will look familiar if you have used OpenAI’s API before.
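For reference, the “Your First API Call” example boils down to the standard openai client pointed at https://api.deepseek.com with the model name deepseek-chat. I've wrapped it in two functions so the message-building part can be reused; the function names are my own, and you should check the current docs for model names and parameters before relying on this sketch.

```python
# Requires the OpenAI SDK: pip install openai
from typing import Dict, List


def build_messages(prompt: str, system: str = "You are a helpful assistant") -> List[Dict[str, str]]:
    """Build the chat message list sent to the model."""
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": prompt},
    ]


def ask_deepseek(prompt: str, api_key: str) -> str:
    """Send one chat request to DeepSeek through its OpenAI-compatible API."""
    from openai import OpenAI  # imported here so build_messages works without the SDK installed

    client = OpenAI(api_key=api_key, base_url="https://api.deepseek.com")
    response = client.chat.completions.create(
        model="deepseek-chat",
        messages=build_messages(prompt),
        stream=False,
    )
    return response.choices[0].message.content


# Usage (needs the openai package, network access, and a funded account):
# print(ask_deepseek("Hello", api_key="<DeepSeek API Key>"))
```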

Step 4: Insert Your API Key

- Obtain your API key from the DeepSeek platform.

- Paste the key into the designated area in your Python script.
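Pasting the key straight into main.py works, but it is easy to leak if the file is shared or committed to Git. A safer pattern is to read it from an environment variable; the name DEEPSEEK_API_KEY below is my own choice, not something the platform requires.

```python
import os


def load_api_key(var_name: str = "DEEPSEEK_API_KEY") -> str:
    """Read the API key from the environment instead of hard-coding it in main.py."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable to your DeepSeek API key")
    return key


# Usage: export DEEPSEEK_API_KEY=... in your shell, then pass load_api_key() to the OpenAI client.
```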

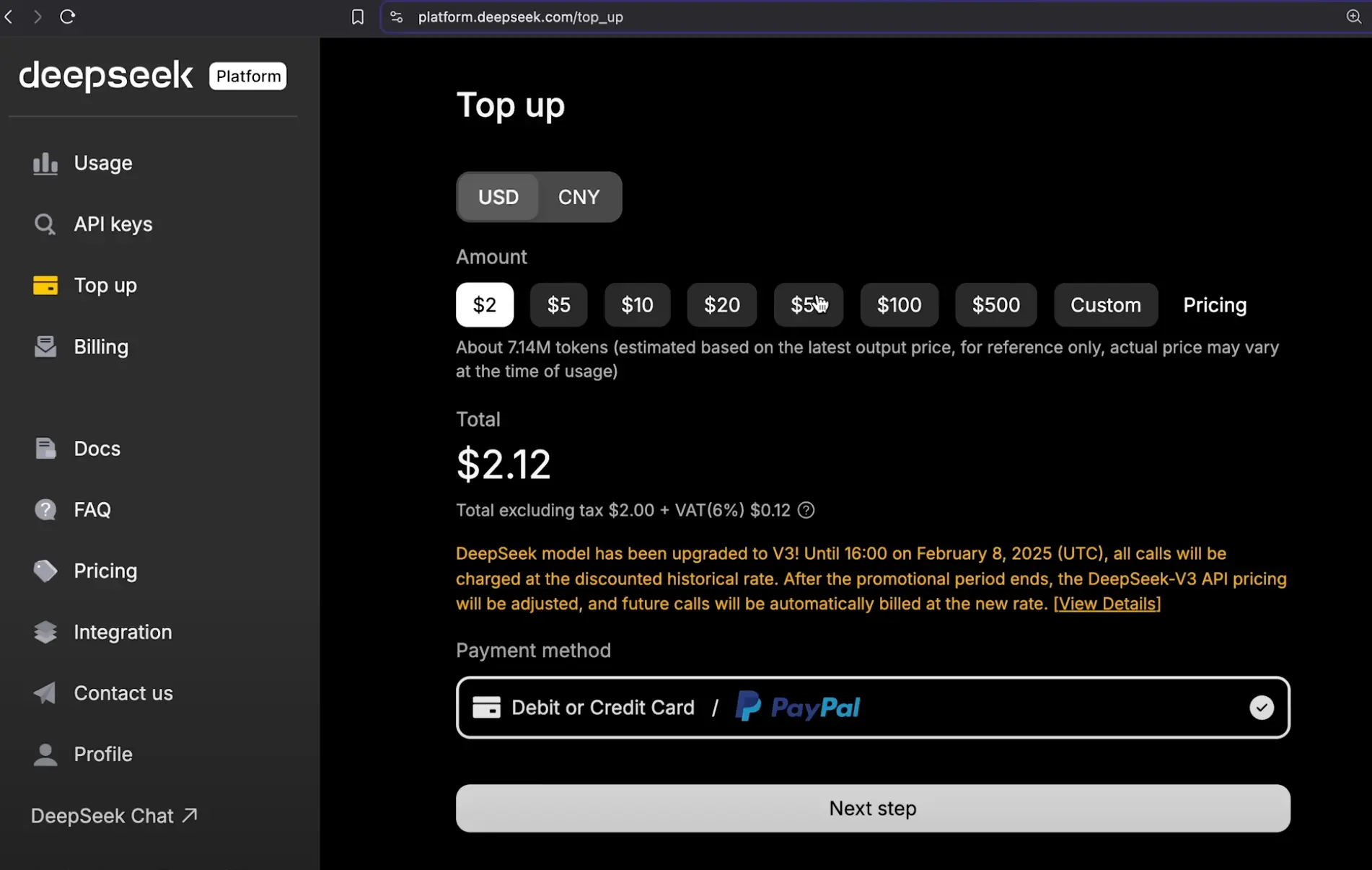

Setting Up Payment and API Access

To make API calls, you need to add a balance to your DeepSeek account:

- Top Up Account: Add $2 via PayPal (recommended).

- Verify Balance: Refresh the dashboard to confirm your new balance.
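You can also check the balance from code. This sketch assumes the `GET /user/balance` endpoint and the `balance_infos`, `currency`, and `total_balance` fields described in DeepSeek's API reference at the time of writing; verify the path and field names against the current docs before relying on it.

```python
import json
import urllib.request
from typing import Dict


def fetch_balance(api_key: str) -> dict:
    """Query the account balance endpoint (path and fields assumed from DeepSeek's API reference)."""
    request = urllib.request.Request(
        "https://api.deepseek.com/user/balance",
        headers={"Accept": "application/json", "Authorization": f"Bearer {api_key}"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def summarize_balance(payload: dict) -> Dict[str, str]:
    """Map each currency to its total balance string."""
    return {info["currency"]: info["total_balance"] for info in payload.get("balance_infos", [])}


# Usage (needs network access and a valid key):
# data = fetch_balance("<DeepSeek API Key>")
# print(data.get("is_available"), summarize_balance(data))
```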

Testing DeepSeek V3: Coding Capabilities

For my testing, I focused on coding and reasoning tasks. Here are the results:

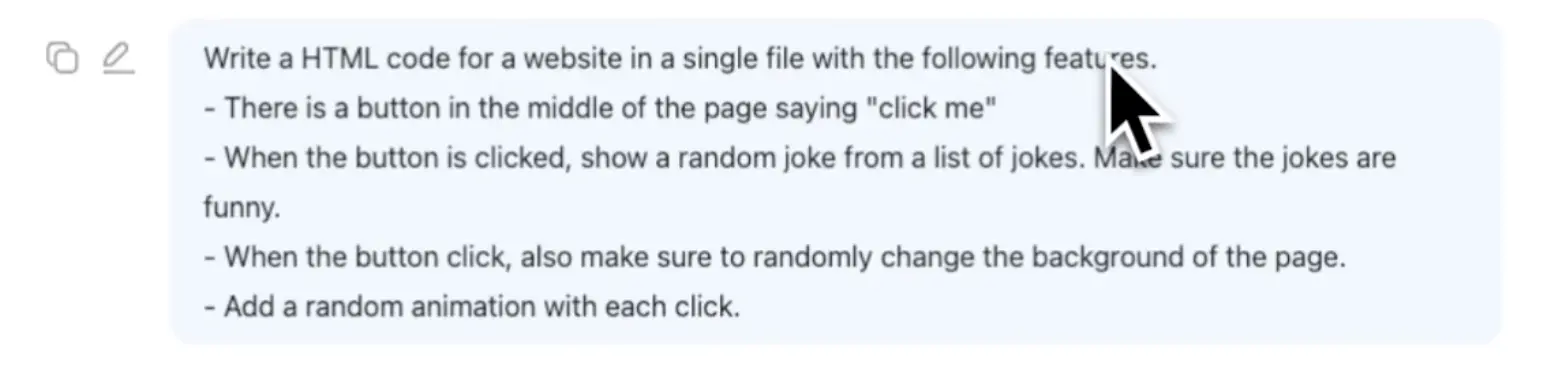

Test 1: HTML Web Page with Interactivity

I prompted the model to create a single HTML file with the following features:

- A button labeled "Click Me" that displays a random joke when clicked.

- Random background color changes with each click.

- An animation displayed alongside the joke.

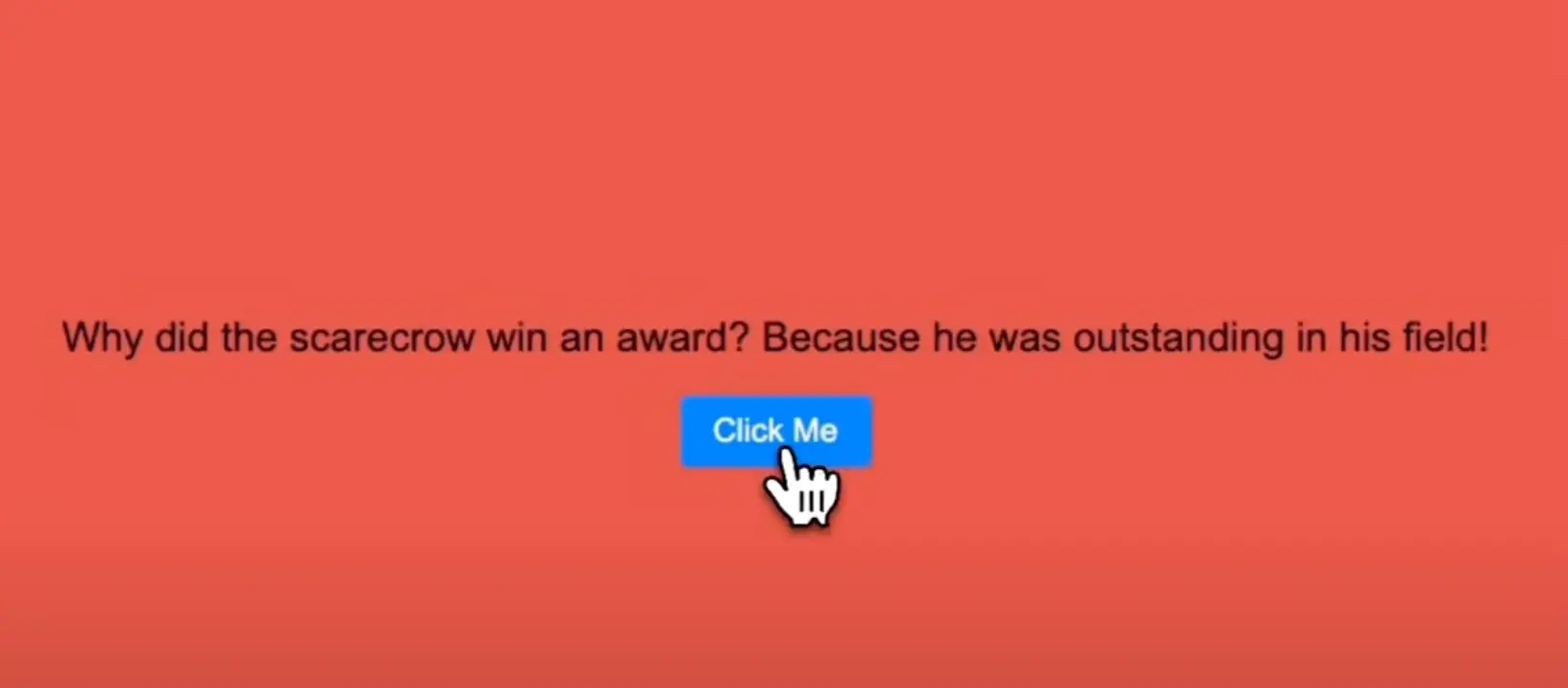

Outcome:

The generated HTML code successfully implemented all requested features. The jokes list was functional, though some were repetitive. When run locally, the page performed perfectly, with smooth animations and background changes.
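To reproduce this test, you can send the prompt through the API and write the returned HTML to a file you open in the browser. Models usually wrap code in a Markdown code fence, so the sketch below pulls out the first fenced block tagged html and falls back to the raw reply. The helper names and the index.html file name are my own choices.

```python
import re
from pathlib import Path

FENCE = "`" * 3  # the Markdown code-fence marker, built from single backticks


def extract_code_block(reply: str, lang: str = "html") -> str:
    """Return the first fenced code block tagged `lang`, or the whole reply if none is found."""
    pattern = re.escape(FENCE + lang) + r"[^\n]*\n(.*?)" + re.escape(FENCE)
    match = re.search(pattern, reply, re.DOTALL)
    return match.group(1).strip() if match else reply.strip()


def save_html(reply: str, path: str = "index.html") -> Path:
    """Write the extracted HTML to disk so it can be opened in a browser."""
    out = Path(path)
    out.write_text(extract_code_block(reply, "html"), encoding="utf-8")
    return out


# Usage: save_html(reply_text) after getting the model's reply, then open index.html in a browser.
```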

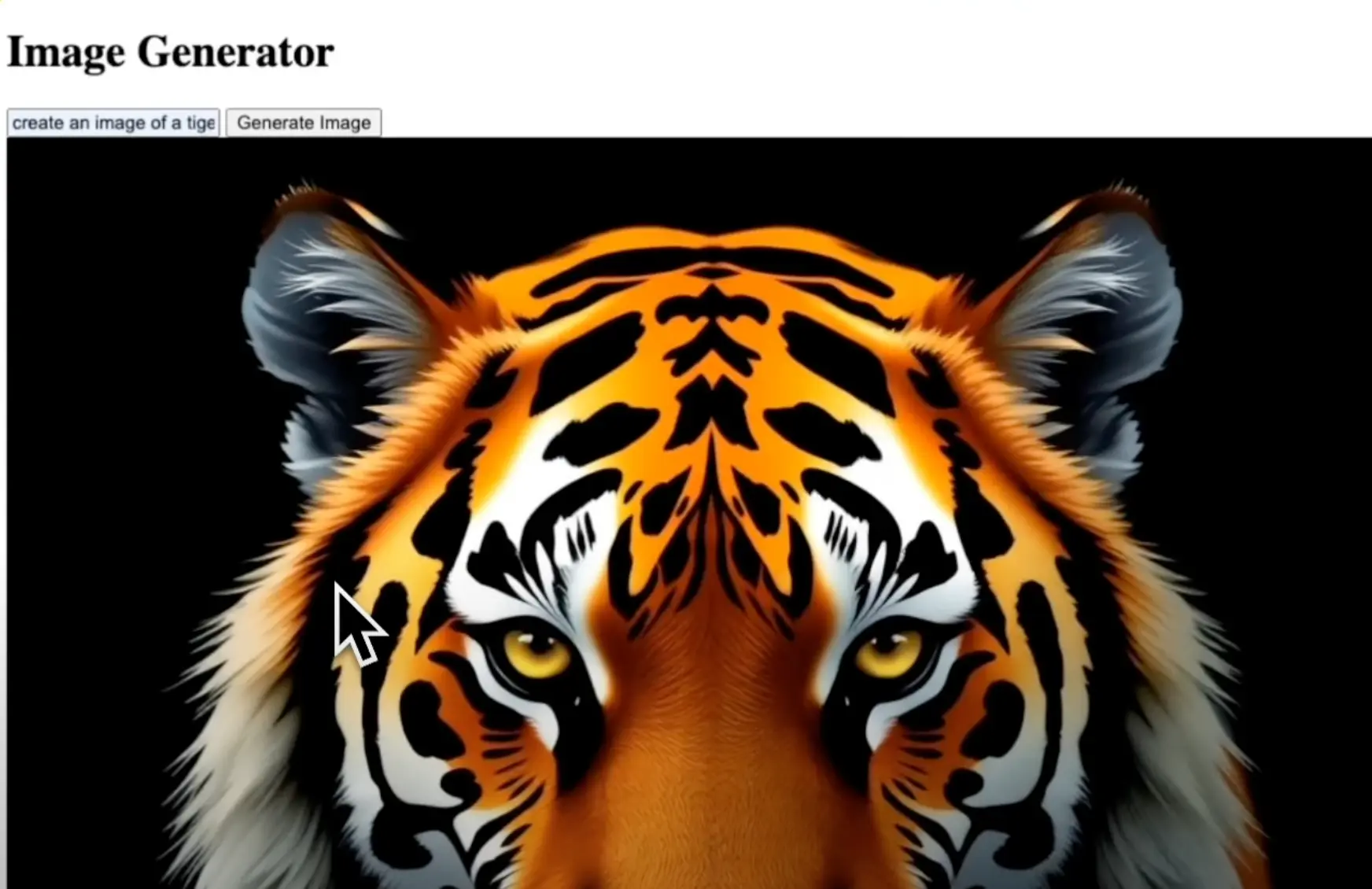

Test 2: Text-to-Image Web Application

The second test involved creating a web app that:

- Accepts a text description from the user.

- Generates an image using the Flux model API hosted on Replicate.

- Allows downloading or regenerating the image.

Key Requirements:

- Frontend in HTML and backend in Python.

- A project structure with an automated setup command.

Outcome:

DeepSeek V3 generated a well-structured project with clear instructions and a bash script for setup. The Python backend and HTML frontend were functional and integrated seamlessly with the external API.
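The backend call at the heart of this app can be sketched as below. It assumes the official replicate Python client (which reads a REPLICATE_API_TOKEN environment variable) and the black-forest-labs/flux-schnell model; the generated project may have used a different Flux variant or plain HTTP requests, and the length limit and helper names are mine. A web route would call generate_image_url() and hand the URL to the HTML frontend for display or download.

```python
# Requires: pip install replicate, plus a REPLICATE_API_TOKEN environment variable.
MODEL = "black-forest-labs/flux-schnell"  # assumed Flux variant; swap in the one your app uses
MAX_PROMPT_CHARS = 1000  # arbitrary limit for this sketch


def clean_description(text: str) -> str:
    """Normalize whitespace and reject empty or overly long descriptions before calling the API."""
    cleaned = " ".join(text.split())
    if not cleaned:
        raise ValueError("Please enter a description of the image.")
    if len(cleaned) > MAX_PROMPT_CHARS:
        raise ValueError(f"Description must be at most {MAX_PROMPT_CHARS} characters.")
    return cleaned


def generate_image_url(description: str) -> str:
    """Ask the Flux model on Replicate for one image and return its URL."""
    import replicate  # imported lazily so the validation helper works without the client

    output = replicate.run(MODEL, input={"prompt": clean_description(description)})
    first = output[0] if isinstance(output, (list, tuple)) else output
    # Newer client versions return file objects with a .url attribute; older ones return plain URLs.
    return str(getattr(first, "url", first))


# Regenerating is simply another call with the same description:
# url = generate_image_url("a lighthouse at dusk, watercolor")
```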

Insights from DeepSeek’s Technical Report

DeepSeek V3’s technical report, available on GitHub, offers over 30 pages of in-depth detail. For a simplified version, refer to resources like the New Society platform, which provides easy-to-digest summaries.

Final Thoughts

DeepSeek V3 is an extraordinary open-source model, offering unparalleled performance at minimal cost. While there are challenges, such as data privacy concerns and limited hosting options, the opportunities it provides for developers are immense.
